AI is no longer restricted to your smartphone or PC; it’s now coming for visual effects in movies.

The technology is developing faster than regulation can keep up, and in 2023 that helped push some of Hollywood’s creative guilds to strike, with concerns over AI forming a large part of their lobbying. While VFX unions have yet to take strike-level action, AI remains an impending issue that could disrupt the entire post-production industry.

The VFX industry has faced plenty of criticism in recent years, particularly as audiences continue to buy into the belief that “no CGI” films are superior. Now the industry faces a new hurdle: the generative AI tools that many creatives have lobbied against are coming for their jobs.

But is AI something to be feared or embraced in visual effects, and what regulations are essential to keep it in check? We spoke to three visual effects supervisors working across film and television to understand where they stand.

Types of AI in VFX

Let’s be clear: AI isn’t new to the VFX industry. Theo Jones, an Academy Award-nominated VFX supervisor at Framestore, told Gizmodo Australia over email that certain AI tools are already in use in visual effects studios and have been for some time.

“You have highly targeted, predictive, machine learning tools trained on specific data sets of known provenance. These tools tend to have a clear relationship between the data they were trained on, the input of the artists and the resulting output.

“This category of tools has been in use in VFX for well over a decade and is very valuable in optimising specific processes and streamlining artists’ workflows so they can focus on the more creative aspects of the job where their talents are needed most,” Jones said.

Generative AI is where things get tricky, as it typically mines the existing work of artists to generate something new. Any use of generative AI in Hollywood is contentious. Disney copped flak for using AI to create the opening titles of the Marvel Studios series Secret Invasion, prompting the studio behind the sequence to reassure audiences that the use of AI did not cost artists their jobs.

Creative work in film and television (and its marketing) is closely analysed by audiences for any sign of AI use, then heavily scrutinised when it is found, as happened with the first promotional artwork for Prime Video’s Fallout. Sometimes audiences will boycott a project outright if it so much as looks in AI’s direction. That is what Late Night with the Devil faced when it was revealed that three images had been generated with AI.

It seems to be the lack of transparency around generative AI that causes hesitation, and sometimes even disdain, towards the technology. It’s often unclear what data the models are trained on to generate new content. Add to that the resistance to change that greets any unfamiliar technology, and an inherent fear that it may lead to human jobs being replaced. This is one of the reasons why the writers and actors went on strike in Hollywood last year: to ensure that their creative work and livelihoods would be safeguarded.

The limit does exist

This knee-jerk, fearful reaction to AI isn’t unwarranted. As speculative fiction has taught us, in the wrong hands AI can lead to a whole range of problems. One modern example is deepfakes.

While some of the more successful deepfakes are truly indistinguishable from the real thing (the Tom Cruise deepfakes, for instance, led to the creation of a whole new AI VFX studio), the technology as it stands still needs skilled artists behind it to be successful.

“[Deepfakes] might be convincing if you’re looking at it on your phone, but when you’re looking at it on an IMAX cinema screen, it’s quite different as to what will pass as convincing,” Sydney-based VFX supervisor and CG producer David Wood said. “…Human faces are something we perceive better than anything else, even the slightest uncanny valley we pick up on and then you completely lose the illusion.”

Jones at Framestore said, “It is only in the last couple of years that the tooling has matured enough to be used in visual effects.

“And even then it is only truly effective when used in conjunction with a wide array of existing VFX tools and in the hands of really talented artists who are capable of working around the technique’s limitations and compensating for its deficiencies.”

For generative AI to be truly useful in the VFX pipeline, it still needs a human at the wheel.

“Because the domain [generative AI models] are targeting is so broad, i.e. creating an entire image or an entire video, and the input is generally natural language or language plus guide images, they lack the fine creative control required for VFX.

“In VFX we have developed, over many years, tools that allow artists to describe in great detail the performance, motion, lighting, etc, they want to create to satisfy the specific creative needs of the filmmakers. Current ‘generative AI’ tooling lacks any of this fine control,” Jones explained.

Anyone who has played around with generating AI images or video will know that crafting the input needed to get exactly what you envisioned is incredibly difficult, and revising the result to make small changes is harder still, often forcing the user back to square one each time. A human element is therefore still very much needed in the process, both to make modifications and to communicate with everyone involved.

“[This job] requires a whole bunch of analysing input from different people and then evaluating it, averaging it, evaluating who’s more important to respond to,” Wood explained.

“Perhaps, before it goes to the client, the director will say, ‘I want this’ but the producer or production company might say ‘we feel that’s not in line with what the client might want.’ That VFX artist has to not only think about how to achieve all those things technically, they will have to consolidate and sometimes politically analyse. So for an AI to do that is very challenging.”

“There is currently Gen AI hype that exceeds the reality, but we are on the precipice of it exploding into something useful,” he added.

The future of AI in VFX

The technology isn’t quite there yet, but it will get there eventually, and that doesn’t necessarily spell doom for artists in the VFX industry.

“We will continue to use targeted, predictive, AI tools for specific tasks, placed in the hands of talented artists, rather than seeing a mass adoption of generative AI tools that replace artists,” Jones said.

“The process of making a movie is an iterative creative endeavour that requires the input of many imaginative people at each stage to arrive at the final outcome. Generative AI tools are capable of incredible things but they lack granular control and without this fine control being put in the hands of experienced artists they are not capable of producing the vast majority of content that a VFX company is asked to produce.”

Jones added that one area where generative AI could be useful is early in the production process, where it’s capable of quickly translating a rough creative idea into imagery that can help convey a director’s vision.

Wood foresees the future of AI in VFX as a crossover between the two.

“Moving forward with AI, we’re going to have a fusion,” Wood said. “Maybe some shots will be AI, maybe some backgrounds or some characters will be AI. But there’ll also probably be VFX compositing as well… I see it as another tool in storytelling. A project doesn’t have to be either or, and I see a future where AI is integrated with traditional digital effects.”

Much as the print industry evolved into a digital one, many industries are now transitioning to AI, and VFX is one of them. But new technologies also bring the opportunity for new roles.

“[Those] technical developments didn’t kill skills, they allow greater flexibility and crossover, forming new skills…” Wood said.

“Technology will always change and in post production we are used to that at a rapid rate. Although AI is going to be a Brobdingnagian shift in most industries, content creators all have their speciality creative skills. AI is not going away, it’s going to get better, we need to adapt and embrace it as another tool.”

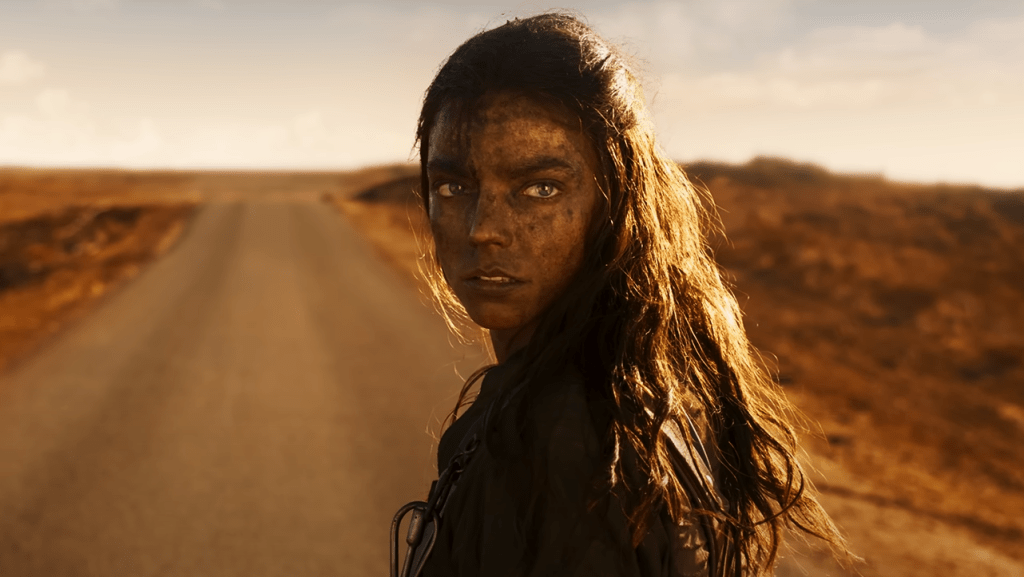

This evolution is already evident in the industry. Metaphysic, the aforementioned VFX studio behind the Tom Cruise deepfakes, was most recently responsible for merging the faces of Anya Taylor-Joy and Alyla Browne in Furiosa: A Mad Max Saga. Metaphysic’s section of the film’s credits listed a whole range of job titles, from traditional VFX supervisors and compositors to newer titles like AI data operator, AI innovation lead and AI artist.

Regulation required

Job security aside, there are legitimate concerns around the ethical and legal use of generative AI, which still needs proper regulation and a fair system of compensation. Unfortunately, because the technology is evolving so rapidly, there is still a fair bit of confusion around some of the VFX industry’s longstanding processes and what generative AI is actually capable of.

Erik Winquist, a VFX supervisor at Wētā FX who recently oversaw Kingdom of the Planet of the Apes, spoke of his concerns about these misunderstandings, particularly that they may make performers hesitant to participate in motion capture and digital scans.

“Essentially we take their performance and we map that onto a digital puppet of them, and then we transfer that from the puppet of them on to the puppet of the ape character they’re playing. The actor puppet allows us to at least verify that the facial performance is doing what we expect,” Winquist explained.

“If we can at least say that Owen Teague’s puppet looks just like what our team is doing as far as the emotional state of his face, then we can go forward with confidence that when we map it onto his character, we know that will be in a good state there. But that involves getting Owen and everybody else into the cyber scanning booth to get that.”

Winquist said he worried that this confusion might make actors more reluctant to come into the photo scanning booth.

“It’s a much bigger deal for them because that’s their likeness we’re talking about, so I understand the issues. But it was just like, I hope this isn’t going to exacerbate apprehensions that they may already have about being photographed,” he added.

As the guild strikes made clear, the way forward lies in proper regulation, legal contracts that account for these new technologies, and transparency and trust between performers and the studios they work with.

“It’s crucial to us that actors feel confident in any capture process and comfortable that their rights to control the use of that data are protected. Limiting the use of any data to the specific project it was captured for and having the rights to any other use reside with the actor is I think the only way of providing that confidence and therefore facilitating the type of trusted collaboration that is needed for us to produce great work,” Jones said.

Metaphysic is arguably at the forefront of this new intersection of AI and VFX, and has tried to ease concerns by promoting a human-first approach. The company’s website states: “our mission is to develop, deploy and popularise proprietary and creative technology that users utilise to create AI-generated and hyperrealistic immersive content while they own and control their data.”

While VFX workers have yet to escalate their concerns to strike action, it’s clear that AI protections need to be put in place for digital artists too.

“Just as the actors have justifiably fought for their rights to control the use of their likeness and performance, so creators of imagery deserve to get compensated when their work is used to train AI models,” Jones said.

In late 2023, Vanessa Holtgrewe, assistant department director at the International Alliance of Theatrical Stage Employees (IATSE), urged the U.S. Congress to protect entertainment workers’ intellectual property from AI piracy by establishing proper regulation around residual contracts for training AI on licensed works. In Australia, the Media, Entertainment & Arts Alliance made similar calls to the federal government, asking for new legislation to make the data used to train AI more transparent and to enforce workers’ rights to consent and be compensated.

“The regulations are still catching up with the technology and I hope we arrive at a place where any AI model needs to disclose the provenance of its training data and rightfully remunerate the people whose data has been used,” Jones said.

Image: Warner Bros/Disney+