What would you do if you saw a self-driving car hit a person?

In Robot Ethics, Mark Coeckelbergh, Professor of Philosophy of Media and Technology at the University of Vienna, posits a trolley problem for 2022: Should the car continue its course and kill five pedestrians, or divert its course and kill one?

In the chapter presented here, Coeckelbergh examines how humans have conceptualized robots within a larger framework and plumbs how self-driving cars would handle lethal traffic situations, and whether that’s even a worthwhile question.

In the 2004 US science-fiction film I, Robot, humanoid robots serve humanity. Yet not all is going well. After an accident, a man is rescued from the sinking car by a robot, but a twelve-year-old girl is not saved. The robot calculated that the man had a higher chance of survival; humans may have made another choice. Later in the film, robots try to take over power from humans. The robots are controlled by an artificial intelligence (AI), VIKI, which decided that restraining human behaviour and killing some humans will ensure the survival of humanity. The film illustrates the fear that humanoid robots and AI are taking over the world. It also points to hypothetical ethical dilemmas should robots and AI reach general intelligence. But is this what robot ethics is and should be about?

Are the Robots Coming, or Are They Already Here?

Usually when people think about robots, the first image that comes to mind is a highly intelligent, humanlike robot. Often that image is derived from science fiction, where we find robots that look and behave more or less like humans. Many narratives warn about robots that take over; the fear is that they are no longer our servants but instead make us into their slaves. The very term “robot” means “forced labour” in Czech and appears in Karel Čapek’s play R.U.R., staged in Prague in 1921, just over a hundred years ago. The play stands in a long history of stories about human-like rebelling machines, from Mary Shelley’s Frankenstein to films such as 2001: A Space Odyssey, Terminator, Blade Runner, and I, Robot. In the public imagination, robots are frequently the object of fear and fascination at the same time. We are afraid that they will take over, but at the same time it is exciting to think about creating an artificial being that is like us. Part of our romantic heritage, robots are projections of our dreams and nightmares about creating an artificial other.

At first these robots are mainly scary; they are monsters and uncanny. But at the beginning of the twenty-first century, a different image of robots emerges in the West: the robot as companion, friend, and perhaps even partner. The idea is now that robots should not be confined to industrial factories or remote planets in space. In the contemporary imagination, they are liberated from their dirty slave work, and enter the home as pleasant, helpful, and sometimes sexy social partners you can talk to. In some films, they still ultimately rebel (think about Ex Machina, for example), but generally they become what robot designers call “social robots.” They are designed for “natural” human-robot interaction, that is, interaction in the way that we are used to interacting with other humans or pets. They are designed to be not scary or monstrous but instead cute, helpful, entertaining, funny, and seductive.

This brings us to real life. The robots are not coming; they are already here. But they are not quite like the robots we meet in science fiction. They are not like Frankenstein’s monster or the Terminator. They are industrial robots and, sometimes, “social robots.” The latter are not as intelligent as humans or their science-fiction kin, though, and often do not have a human shape. Even sex robots are not as smart or conversationally capable as the robot depicted in Ex Machina. In spite of recent developments in AI, most robots are not humanlike in any sense. That said, robots are here, and they are here to stay. They are more intelligent and more capable of autonomous functioning than before. And there are more real-world applications. Robots are not only used in industry but also health care, transportation, and home assistance.

Often this makes the lives of humans easier. Yet there are problems too. Some robots may be dangerous indeed, not because they will try to kill or seduce you (although “killer drones” and sex robots are also on the menu of robot ethics), but usually for more mundane reasons such as because they may take your job, may deceive you into thinking that they are a person, and can cause accidents when you use them as a taxi. Such fears are not science fiction; they concern the near future. More generally, since the impact of nuclear, digital, and other technologies on our lives and planet, there is a growing awareness and recognition that technologies are making fundamental changes to our lives, societies, and environment, and therefore we had better think more, and more critically, about their use and development. There is a sense of urgency: we had better understand and evaluate technologies now, before it is too late, that is, before they have impacts nobody wants. This argument can also be made for the development and use of robotics: let us consider the ethical issues raised by robots and their use at the stage of development rather than after the fact.

Self-Driving Cars, Moral Agency, and Responsibility

Imagine a self-driving car drives at high speed through a narrow lane. Children are playing on the street. The car has two options: either it avoids the children and drives into a wall, probably killing the sole human passenger, or it continues its path and brakes, but probably too late to save the life of the children. What should the car do? What will cars do? How should the car be programmed?

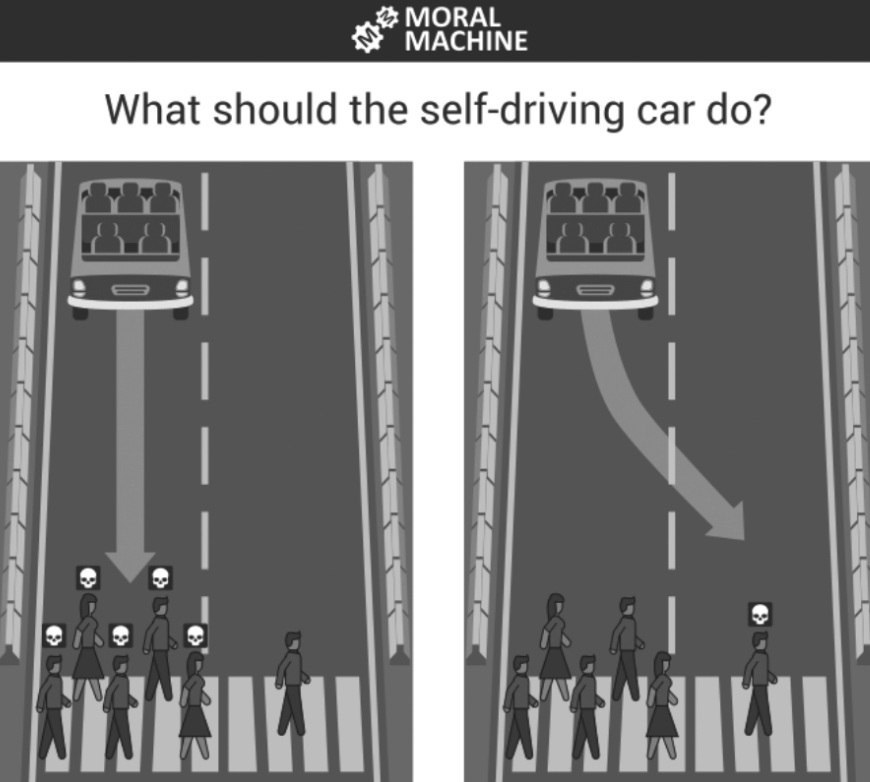

This thought experiment is an example of a so-called trolley dilemma. A runaway trolley is about to drive over five people tied to a track. You are standing by the track and can pull a lever that redirects the trolley onto another track, where one person is tied up. Do you pull the lever? If you do nothing, five people will be killed. If you pull the lever, one person will be killed. This type of dilemma is often used to make people think about what are perceived as the moral dilemmas raised by self-driving cars. The idea is that data on how people respond to such dilemmas could then help machines decide.

For instance, the Moral Machine online platform has gathered millions of decisions from users worldwide about their moral preferences in cases when a driver must choose “the lesser of two evils.” People were asked if a self-driving car should prioritise humans over pets, passengers over pedestrians, women over men, and so on. Interestingly, there are cross-cultural differences with regard to the choices made. Some cultures such as Japan and China, say, were less likely to spare the young over the old, whereas other cultures such as the United Kingdom and United States were more likely to spare the young. This experiment thus not only offers a way to approach the ethics of machines but also raises the more general question of how to take into account cultural differences in robotics and automation.

Figure 3 shows an example of a trolley dilemma situation: Should the car continue its course and kill five pedestrians, or divert its course and kill one?

Applying the trolley dilemma to the case of self-driving cars may not be the best way of thinking about the ethics of self-driving cars; luckily, we rarely encounter such situations in traffic, or the challenges may be more complicated and not involve binary choices, and this problem definition reflects a specific normative approach to ethics (consequentialism, and in particular utilitarianism). There is discussion in the literature about the extent to which trolley dilemmas represent the actual ethical challenges. Nevertheless, trolley dilemmas are often used as an illustration of the idea that when robots get more autonomous, we have to think about the question of whether or not to give them some kind of morality (if that can be avoided at all), and if so, what kind of morality. Moreover, autonomous robots raise questions regarding moral responsibility. Consider the self-driving car again.

In March 2018, a self-driving Uber car killed a pedestrian in Tempe, Arizona. There was an operator in the car, but at the time of the accident the car was in autonomous mode. The pedestrian was walking outside the crosswalk. The Volvo SUV did not slow down as it approached the woman. This is not the only fatal crash reported. In 2016, for instance, a Tesla Model S car in autopilot mode failed to detect a large truck and trailer crossing the highway, and hit the trailer, killing the Tesla driver. To many observers, such accidents show not only the limitations of present-day technological development (currently it doesn’t look like the cars are ready to participate in traffic) and the need for regulation; they raise challenges with regard to the attribution of responsibility. Consider the Uber case. Who is responsible for the accident? The car cannot take responsibility. But the human parties involved can all potentially be responsible: the company Uber, which employs a car that is not ready for the road yet; the car manufacturer Volvo, which failed to develop a safe car; the operator in the car who did not react in time to stop the vehicle; the pedestrian who was not walking inside the crosswalk; and the regulators (e.g., the state of Arizona) that allowed this car to be tested on the road. How are we to attribute and distribute responsibility given that the car was driving autonomously and so many parties were involved? How are we to attribute responsibility in all kinds of autonomous robot cases, and how are we to deal with this issue as a profession (e.g., engineers), company, and society, preferably proactively, before accidents happen?

Some Questions Concerning Autonomous Robots

As the Uber accident illustrates, self-driving cars are not entirely science fiction. They are being tested on the road, and car manufacturers are developing them. For example, Tesla, BMW, and Mercedes already test autonomous vehicles. Many of these cars are not fully autonomous yet, but things are moving in that direction. And cars are not the only autonomous and intelligent robots around. Consider again autonomous robots in homes and hospitals.

What if they harm people? How can this be avoided? And should they actively protect humans from harm? What if they have to make ethical choices? Do they have the capacity to make such choices? Moreover, some robots are developed in order to kill (see chapter 7 on military robots). If they choose their target autonomously, could they do so in an ethical way (assuming, for the sake of argument, that we allow such robots to kill at all)? What kind of ethics should they use? Can robots have an ethics at all? With regard to autonomous robots in general, the question is if they need some kind of morality, and if this is possible (if we can and should have “moral machines”). Can they have moral agency? What is moral agency? And can robots be responsible? Who or what is and should be responsible if something goes wrong?

Adapted from Robot Ethics by Mark Coeckelbergh. Copyright 2022. Used with Permission from The MIT Press.