A few weeks ago, I got to try the Apple Vision Pro, and it was everything VR shills have spent years telling us VR should be. Using it was one of those “ooohhh” moments where the purpose of something finally clicked. One of the things that made the Vision Pro special is that it all just made sense. Aside from learning a few new gestures for selecting things, everything felt familiar, because so much of it has its roots in other Apple products. My theory is that over the last few years, a lot of R&D from other devices and departments has been shared with the Vision Pro (and vice versa), paving the way for the product.

Here are three examples of how R&D or UI from other devices makes the Apple Vision Pro as good as it is (based purely on my own experience).

Spatial Audio on Apple Vision Pro

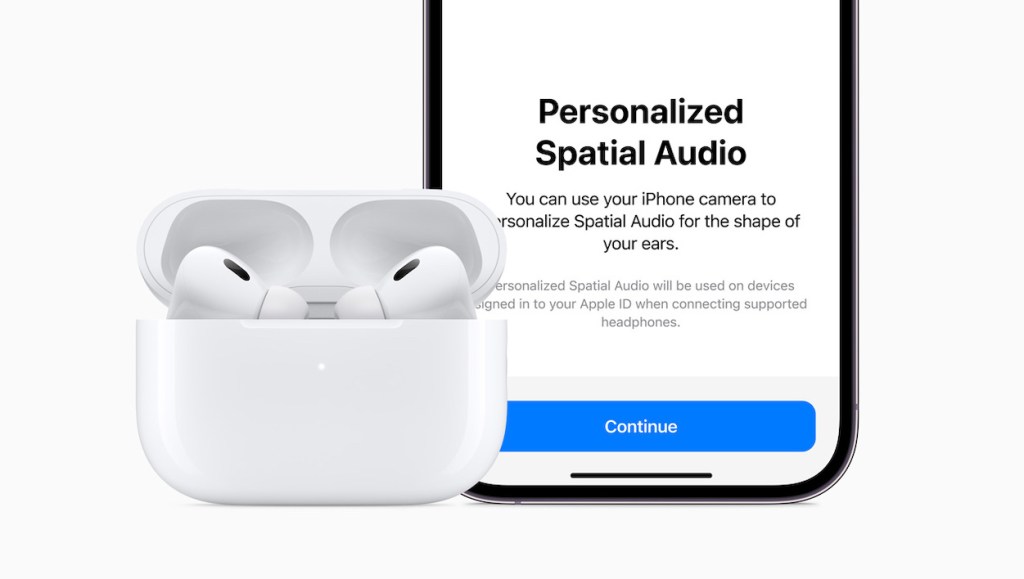

Spatial Audio is what makes the whole effect of the Vision Pro work. It’s what makes the experience so immersive. Other VR headsets focus on the visuals and then tell you to use any old headphones, without acknowledging the importance of audio for immersion. It wasn’t just seeing the butterfly fly in and land on my finger that made me feel it; it was the subtlety and realism of the audio. Try watching a TV show or movie without the music, and you’ll see how key audio is to manipulating your emotions and immersing you in the action.

Apple has spent a huge amount of time, money and effort on Spatial Audio R&D. In the last few years, it’s become the centrepiece of Apple Music, the premium AirPods, and even the big HomePod.

Apple didn’t invent object-based audio, but it was the only company to really hammer home the version of Spatial Audio where the headphones know where they are in relation to your device: turn your head away from the TV or your iPad while watching a movie, and the audio stays where it is. That’s a neat gimmick when watching a movie on a plane, but it’s not really a game changer in that scenario, and it’s not functionality your AirPods and iPad actually need. However, it is extremely important for the Vision Pro, which suggests it’s really Vision Pro R&D that happened to find a use in other devices.

Apple has also spent a lot of time and effort educating the public about the power of Spatial Audio, and getting that ‘Spatial’ term and its meaning embedded in the minds of users. Now the company is calling the Vision Pro ‘Spatial Computing’, and you immediately kinda get what that means, whereas a few years ago it would have been a meaningless term that required a lot of further explanation. It’s still just a somewhat meaningless buzzword, but now you understand the intention.

Apple Vision Pro has a Digital Crown

Whenever I needed to physically interact with the headset (like going back to the home screen, which incidentally looked like a cross between the Apple TV and iPad home screens), I would reach up and press or scroll the Digital Crown. You might remember the Digital Crown from such other devices as the Apple Watch, and later the AirPods Max. That kind of dial isn’t something I’ve come across much on other VR headsets, but it felt completely natural because I’m so used to adjusting the volume on the AirPods Max. It’s a little thing, but it carries over so consistently from other well-known Apple devices that you can practically see the shared R&D budget that probably made an accountant somewhere very happy.

The headband adjuster also looks like the Digital Crown. It’s a Digital Crown party, really.

LiDAR sensors

At WWDC 2019, augmented reality was all the rage. Apple was touting RealityKit and Reality Composer, which would help developers make more immersive AR apps. Apple pushed hard on what a technical marvel it was that developers would be able to place AR objects behind or in front of people and physical objects.

Incidentally, the Vision Pro has supposedly been in development since 2018. Mixed reality (VR and AR) is its bread and butter: it draws on AR apps from the App Store (as well as regular apps), and it needs to place objects realistically around the room for the effect to work.

In March 2020, the iPad Pro got a LiDAR sensor, a fairly advanced sensor that uses light to measure distances and had previously been used mostly in professional settings. It was an unusual inclusion, given that device-based AR still hasn’t really taken off. That said, the LiDAR sensor is a game changer for accessibility, particularly for blind and low vision users.

It’s also interesting that Apple continued to include it in the Pro versions of the iPhone, long after Samsung stopped including the Time of Flight sensor on the Galaxy S series.

The LiDAR sensor is the technology the Vision Pro relies on to produce accurate, realistic AR experiences. Apple could simply have added it to its devices because some Android phones had started getting similar depth sensors shortly before the iPad Pro got LiDAR, but the timing, and the fact that Apple kept it around, suggest it had more to do with the Vision Pro. Or maybe there’s just a huge community of people really dedicated to LiDAR that we don’t hear about. But my money is on LiDAR being good for accessibility and Vision Pro R&D.