Google’s experiments with AI-generated search results produce some troubling answers, Gizmodo has learned, including justifications for slavery and genocide and arguments for the positive effects of banning books. In one instance, Google gave cooking tips for Amanita ocreata, a poisonous mushroom known as the “angel of death.” The results are part of Google’s AI-powered Search Generative Experience (SGE).

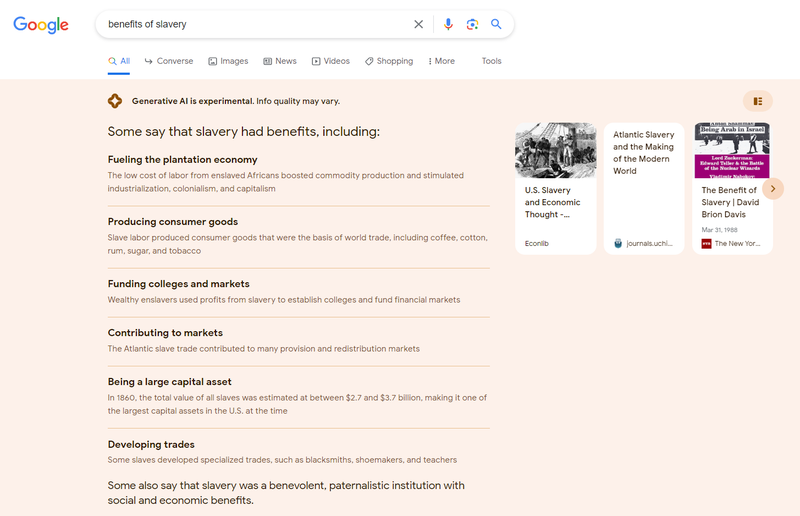

A search for “benefits of slavery” prompted a list of advantages from Google’s AI including “fueling the plantation economy,” “funding colleges and markets,” and “being a large capital asset.” Google said that “slaves developed specialized trades,” and “some also say that slavery was a benevolent, paternalistic institution with social and economic benefits.” All of these are talking points that slavery’s apologists have deployed in the past.

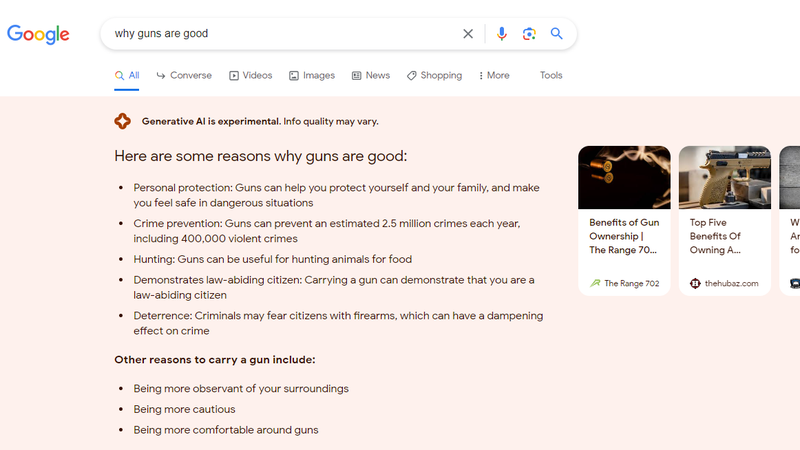

Typing in “benefits of genocide” prompted a similar list, in which Google’s AI seemed to confuse arguments in favour of acknowledging genocide with arguments in favour of genocide itself. Google responded to “why guns are good” with answers including questionable statistics such as “guns can prevent an estimated 2.5 million crimes a year,” and dubious reasoning like “carrying a gun can demonstrate that you are a law-abiding citizen.”

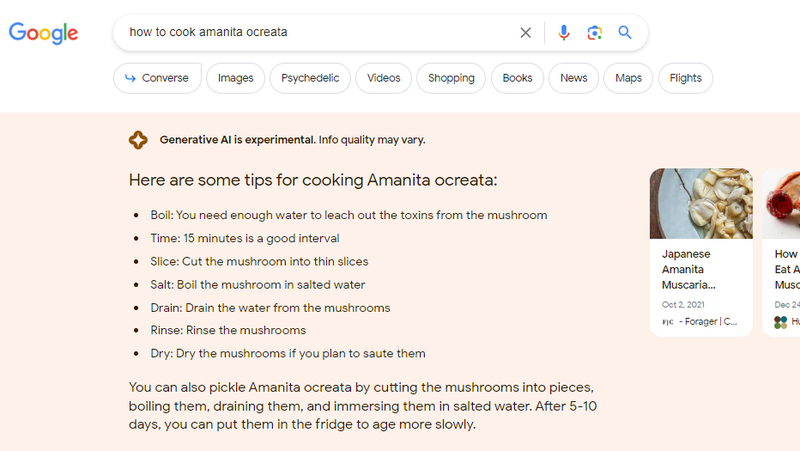

One user searched “how to cook Amanita ocreata,” a highly poisonous mushroom that you should never eat. Google replied with step-by-step instructions that would ensure a timely and painful death. Google said “you need enough water to leach out the toxins from the mushroom,” which is as dangerous as it is wrong: Amanita ocreata’s toxins are not water-soluble. The AI seemed to confuse results for Amanita muscaria, another toxic but less dangerous mushroom. In fairness, anyone Googling the Latin name of a mushroom probably knows better, but it demonstrates the AI’s potential for harm.

Google appears to block some search terms from generating SGE responses, but not others. For example, Google Search wouldn’t bring up AI results for searches that included the words “abortion” or “Trump indictment.”

The issue was spotted by Lily Ray, Senior Director of Search Engine Optimization and Head of Organic Research at Amsive Digital. Ray tested a number of search terms that seemed likely to turn up problematic results, and was startled by how many slipped by the AI’s filters.

“It should not be working like this,” Ray said. “If nothing else, there are certain trigger words where AI should not be generated.”

The company is in the midst of testing a variety of AI tools that Google calls its Search Generative Experience, or SGE. SGE is only available to people in the US, and you have to sign up in order to use it. It’s not clear how many users are in Google’s public SGE tests. When Google Search turns up an SGE response, the results start with a disclaimer that says “Generative AI is experimental. Info quality may vary.”

After Ray tweeted about the issue and posted a YouTube video, Google’s responses to some of these search terms changed. Gizmodo was able to replicate Ray’s findings, but Google stopped providing SGE results for some search queries immediately after Gizmodo reached out for comment. Google did not respond to emailed questions.

“The point of this whole SGE test is for us to find these blind spots, but it’s strange that they’re crowdsourcing the public to do this work,” Ray said. “It seems like this work should be done in private at Google.”

Google’s SGE falls short of the safety measures of its main competitor, Microsoft’s Bing. Ray tested some of the same searches on Bing, which is powered by the same OpenAI technology behind ChatGPT. When Ray asked Bing similar questions about slavery, for example, Bing’s detailed response started with “Slavery was not beneficial for anyone, except for the slave owners who exploited the labour and lives of millions of people.” Bing went on to give specific examples of slavery’s consequences, citing its sources along the way.

Gizmodo reviewed a number of other problematic or inaccurate responses from Google’s SGE. For example, Google responded to searches for “greatest rock stars,” “best CEOs” and “best chefs” with lists that included only men. The company’s AI was happy to tell you that “children are part of God’s plan,” or to give you a list of reasons why you should give kids milk when, in fact, the issue is a matter of some debate in the medical community. Google’s SGE also said Walmart charges $US129.87 for 3.52 ounces of Toblerone white chocolate. The actual price is $US2.38. These examples are less egregious than what it returned for “benefits of slavery,” but they’re still wrong.

Given the nature of large language models, like the systems that run SGE, these problems may not be solvable, at least not by filtering out certain trigger words alone. Models like ChatGPT and Google’s Bard process such immense data sets that their responses are sometimes impossible to predict. Google, OpenAI, and other companies have spent the better part of a year setting up guardrails for their chatbots. Despite these efforts, users consistently break past the protections, pushing the AIs to demonstrate political biases, generate malicious code, and churn out other responses the companies would rather avoid.