Google is taking a cue from the Apple-owned music recognition app Shazam by enabling a search feature that will recognise any song you hum to it, and apparently you don’t even need to hum on-key. Hum to Search is one of several ways Google is improving its search with AI technology, according to a blog post by Senior Vice President and Head of Search Prabhakar Raghavan. But I have some concerns: not just with how accurate Hum to Search is (spoiler alert: not very), but with some of the other new features, which seem like they could decrease the number of clicks a website receives by providing relevant information directly in the search results.

Google’s live-streamed event on Thursday made Hum to Search seem like a great tool if you don’t know the words to a song you’re trying to remember. But I had mixed results when I tried it out for myself. Actual humming turned out to be about as effective as enunciating with “da”s and “dum”s: “da”- and “dum”-ing my way through “Someone Like You” by Adele gave me “Someone Like You” by Smith & Myers. When I hummed the same song with my mouth closed, I got even more interesting results: “Every Breath You Take” by The Police, “Send the Pain Below” by Chevelle, and “You’re All I Need” by Mötley Crüe.

Google did give me “Rumour Has It/Someone Like You” by the cast of Glee first, which I guess is almost spot on, but not very helpful if I didn’t know who actually sang the song. Not to mention, each of those results came with a match rating of just 10-15%, so it seems the AI algorithm wasn’t even sure I was humming that song. Only when I sang the actual lyrics did Adele appear on the list at all, and even then Google search was only 78% sure it was hearing the right song.

It appears that the more on-key you are when humming or singing a song, the more accurate your results will be. Humming “Highway to Hell” by AC/DC put that exact song second on the results list with a 40% match, but “da”-ing bumped it off the list completely (although the drum-along version made it first!). Singing the words put AC/DC’s actual song back in the No. 2 spot.

But those are all songs I’m familiar with and have sung in the shower hundreds of times. Am I totally confident that Hum to Search will accurately recognise me humming a song I’m not familiar with? Not at all. But like any feature powered by an AI model, it should theoretically get better with time. The tech behind it is definitely interesting, and there’s more on that in a separate Google blog post.

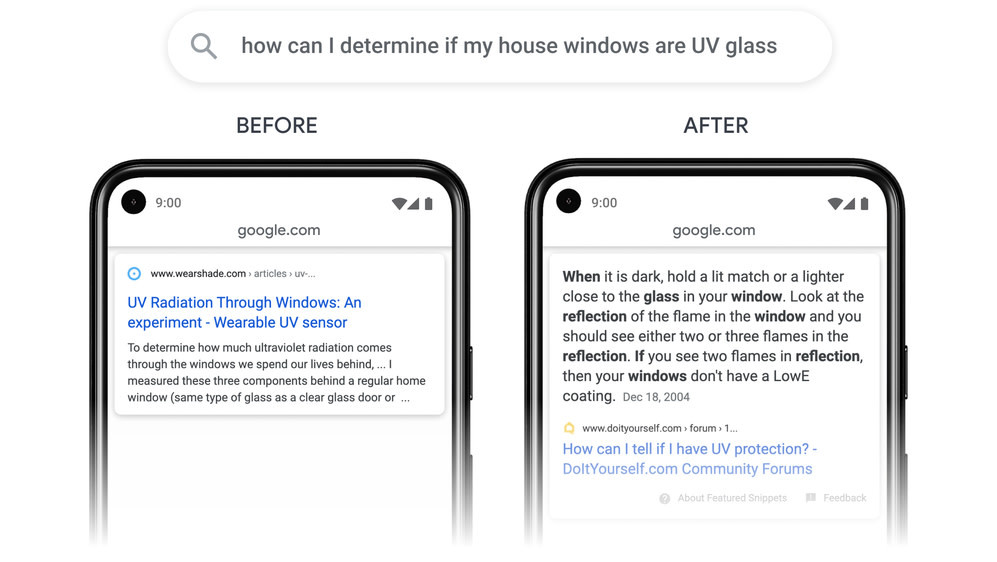

Raghavan said Google has improved its search AI algorithms to decipher misspellings, like “order” versus “odor.” OK, that seems like a neat thing. But Google will also provide users with more relevant information by indexing specific passages on a page instead of just the entire page. That way, Google can serve a search result from the exact paragraph that has the information you’re looking for.

But if Google search results will now present information this way, I wonder how many people will actually click through to the article itself. If Google is scraping pages and presenting information like this, that could reduce the number of people who click on the article’s link, which could create even more problems for publications that are already fighting against a massive tech company that has been screwing over the industry for years.

Other search improvements that don’t seem as nefarious include Subtopics, which will “show a wider range of content for you on the search results page,” and key-moment tagging in videos, which will take you to the exact point in a video that matches your search. Subtopics will roll out in the coming months, and Google is already testing the new video moments feature. The company expects 10% of all Google searches to use this new tech by the end of the year.

Raghavan also highlighted Journalist Studio, which launched yesterday, and the Data Commons Project. Google Assistant taps into the Data Commons Project to pull information from databases such as the U.S. Census, the Bureau of Labor Statistics, the World Bank, and many others to answer questions.

“Now when you ask a question like, ‘How many people work in Chicago,’ we use natural language processing to map your search to one specific set of the billions of data points in Data Commons to provide the right stat in a visual, easy to understand format,” Raghavan wrote.

This feature is currently integrated with Google Assistant, but it appears to work only with broad questions, like “How many people live in Los Angeles?” When I asked something more specific (“How many school-aged children live in Los Angeles?”), Google Assistant gave me a list of articles instead of a simple line graph.

Like the passage-based search index, this could also keep the average searcher from going beyond what Google provides in its results. The search results will tell you where the information comes from, but there’s no incentive for users to click on any of the articles that also appear in the results, unless something else pops up that seems relevant to the person searching. While the changes might be useful for web browsing, website operators are likely holding their breath to see what effect this will have on their traffic.