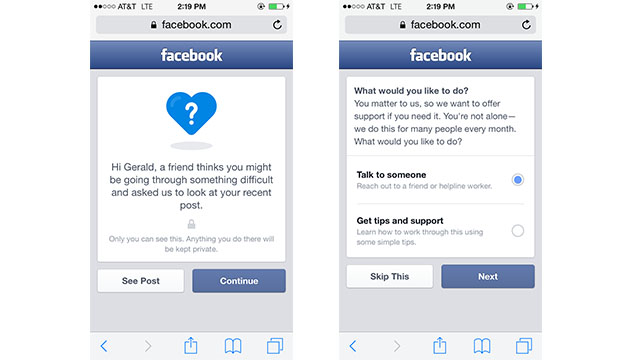

Facebook doesn’t exactly have the greatest track record when it comes to sensitivity, but its newest tool is thankfully bucking that trend. Soon, if you see a worrisome post from a friend and report it, Facebook will prompt them to get help the next time they log in, but only after a third party has reviewed the report.

That last part is key, because while information on who to talk to and where to get help can be wildly helpful, there will always be those looking to abuse the system. But this way, any reports of troubling content will be checked out by Facebook’s “teams working around the world, 24/7,” which will hopefully keep trolls out. And, of course, the tools were designed with help from people who know what they’re doing. According to Facebook:

> Besides encouraging them to connect with a mental health expert at the National Suicide Prevention Lifeline, we now also give them the option of reaching out to a friend, and provide tips and advice on how they can work through these feelings. All of these resources were created in conjunction with our clinical and academic partners.

The rollout will happen in the US over the next few months, and Facebook says it’s working on improving its tools for those of us in other countries. If this helps even one person, it will be worth it. [Facebook via The Verge]

If you struggle with suicidal thoughts, please call the National Suicide Prevention Lifeline: 1-800-273-8255.

Contact the author at ashley@gizmodo.com.