Computer scientists at Google Brain have devised a technique that tricks neural networks into misidentifying images — a hack that works on humans as well.

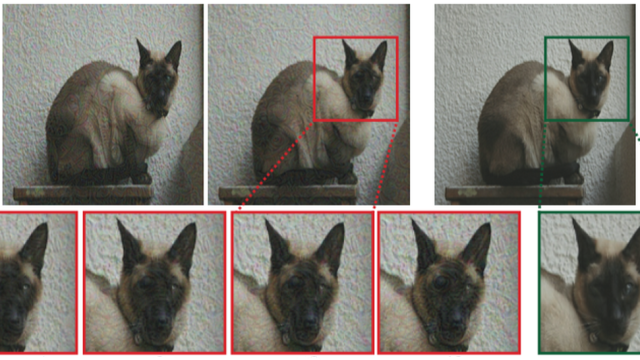

The new algorithm at work, turning a cat into something an AI — and humans — will perceive as a dog. Image: Google Brain

As Evan Ackerman reports at IEEE Spectrum, so-called “adversarial” images can be used to trick both humans and computers. The algorithm, developed by Google Brain, can tweak photos such that visual recognition systems can’t get them right, often misidentifying them as something else.

In tests, a convolutional neural network (CNN), a tool used in machine learning to analyse and identify visual imagery, was fooled into thinking, for instance, that a picture of a cat is actually a dog.

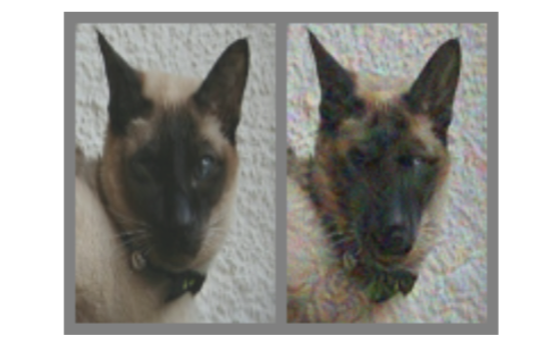

Left: Unmodified image of a cat. Right: A modified, “adversarial” image made to look like a dog. Image: Google Brain

Fascinatingly, humans were likewise tricked, a finding that suggests computer scientists are inching closer to developing systems that see the world just like us. More troublingly, however, it also means we’re about to get a whole lot better at tricking humans. The new study hasn’t been published yet, but it’s available at the arXiv preprint server.

CNNs are actually really easy to fool. Machine-based approaches to computer vision don’t analyse objects the way you and I do. AI looks for patterns by meticulously analysing each and every pixel in a photo, and studiously noting where the tiny dot sits within the larger image. It then matches the overall pattern to a pre-tagged, pre-learned object, like a photo of an elephant. Humans, on the other hand, take a more holistic approach.

To identify an elephant, we notice specific physical attributes, such as four legs, grey skin, large floppy ears, and a trunk. We’re also good at making sense of ambiguity, and extrapolating what might exist outside the border of the photograph. AI is still pretty hopeless at both of those things.


To give you an idea of how easy it is to fool artificial neural nets, a single misplaced pixel tricked an AI into thinking a turtle was a rifle in an experiment run by Japanese researchers last year. A few months ago, the Google Brain researchers who wrote the new study tricked an AI into thinking a banana was a toaster simply by placing a toaster-like sticker within the image.

Other tricks have fooled algorithms into confusing a pair of skiers for a dog, and a baseball for espresso.

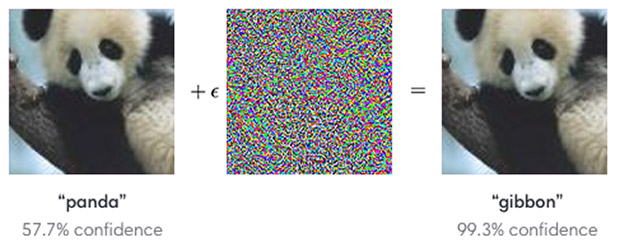

The way to mess with AI, as these examples illustrate, is to introduce a so-called “perturbation” within the image, whether it be a misplaced pixel, a toaster sticker, or patterns of white noise that, while invisible to humans, can fool a bot into thinking a panda is a gibbon.

An unmodified image of a panda (left), when mixed with a finely tuned “perturbation” (centre), makes AIs think it’s a gibbon (right). Image: OpenAI/Google Brain
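To make the idea of a perturbation concrete, here is a minimal sketch in Python with NumPy. It is not the researchers' code. It assumes a toy linear classifier with random weights standing in for a real CNN, a random 64×64 "image", and hypothetical labels (1 for "panda", 0 for "gibbon"). The perturbation nudges every pixel by at most 0.01 in the direction that lowers the classifier's score, a simplified version of the gradient-sign approach.

```python
import numpy as np

def predict(weights, bias, image):
    """Toy linear classifier: 1 ("panda") if the score is positive, else 0 ("gibbon")."""
    return int(weights @ image.ravel() + bias > 0)

def sign_perturbation(weights, epsilon):
    """For a linear score w.x + b, the gradient with respect to x is w.
    Stepping against sign(w) lowers the score as much as possible
    when each pixel may change by at most epsilon."""
    return -epsilon * np.sign(weights)

# Everything below is a made-up stand-in, not data from the study.
rng = np.random.default_rng(0)
image = rng.uniform(0.0, 1.0, size=(64, 64))      # pixel values in [0, 1]
weights = rng.normal(size=image.size)             # hypothetical "trained" weights
bias = 1.0 - weights @ image.ravel()              # chosen so the clean score is about +1

epsilon = 0.01                                    # at most a 1% change per pixel
delta = sign_perturbation(weights, epsilon).reshape(image.shape)
adversarial = np.clip(image + delta, 0.0, 1.0)    # keep pixels in the valid range

print("clean prediction:      ", predict(weights, bias, image))
print("adversarial prediction:", predict(weights, bias, adversarial))
print("largest pixel change:  ", np.abs(adversarial - image).max())
```

Because the tiny per-pixel changes all push the score the same way, they add up across thousands of pixels, which is why a change too small to notice can still flip the label in this toy setup.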

But each of these examples tends to involve a single image classifier trained on its own dataset. In the new study, the Google Brain researchers sought to develop an algorithm that could produce adversarial images capable of fooling multiple systems. Furthermore, the researchers wanted to know if an adversarial image that tricks an entire fleet of image classifiers could also trick humans. The answer, it now appears, is yes.

To do this, the researchers had to make their perturbations more “robust,” that is, create manipulations that can fool a wider array of systems, including humans. This required the addition of “human-meaningful features,” such as altering the edges of objects, enhancing edges by adjusting contrast, messing with the texture, and taking advantage of dark regions in a photo that can amplify the effect of a perturbation.

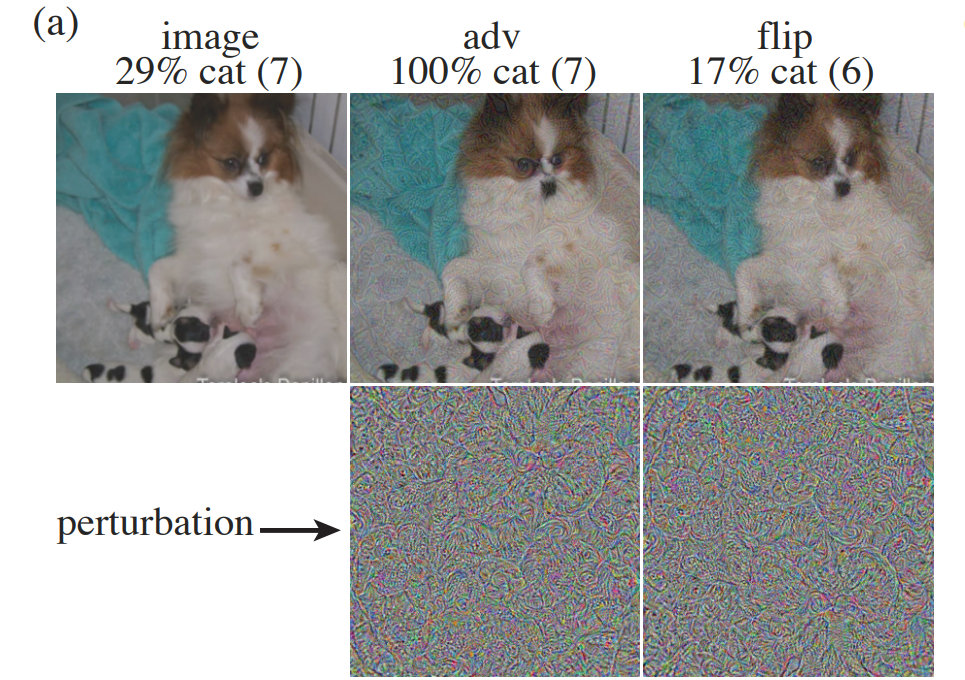

From left to right: Unmodified image of a dog, adversarial image in which the dog is made to look like a cat, and a control image where the perturbation is flipped. Image: Google Brain

In tests, the researchers developed an adversarial image generator whose images were, in some cases, able to fool 10 out of 10 CNN-based machine learning models. To test its effectiveness on humans, they ran experiments in which participants were shown an unmodified photo, an adversarial photo that fooled 100 per cent of CNNs, and a photo with the perturbation layer flipped (the control).
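The setup described above, one perturbation aimed at a whole group of models plus a flipped control, can be sketched in a few lines of Python with NumPy. This is an illustration under loud assumptions, not the study's method: the ten "models" are random linear classifiers rather than CNNs, the image is random noise, the perturbation follows the sign of the averaged weights, and the control flips the perturbation vertically (the article does not say which way the study flipped it).

```python
import numpy as np

rng = np.random.default_rng(1)
shape = (64, 64)
n_models = 10        # stand-ins for the 10 CNN-based models
margin = 3.0         # every toy model scores the clean image at about +3
epsilon = 0.01       # maximum change allowed per pixel

image = rng.uniform(0.0, 1.0, size=shape)
# Each row is one hypothetical linear classifier (an assumption, not a CNN).
weights = rng.normal(size=(n_models, image.size))
biases = margin - weights @ image.ravel()

def count_fooled(candidate):
    """Number of models whose score drops to zero or below, i.e. whose label flips."""
    scores = weights @ candidate.ravel() + biases
    return int(np.sum(scores <= 0))

# One shared perturbation: step against the sign of the averaged weights,
# so the same small change pushes every model's score down at once.
delta = (-epsilon * np.sign(weights.mean(axis=0))).reshape(shape)

adversarial = np.clip(image + delta, 0.0, 1.0)
# Control: same pixel changes, but flipped top to bottom so they no longer
# line up with what the models are sensitive to.
control = np.clip(image + np.flipud(delta), 0.0, 1.0)

fooled_adv = count_fooled(adversarial)
fooled_ctrl = count_fooled(control)
print("models fooled by adversarial image:", fooled_adv, "of", n_models)
print("models fooled by flipped control:  ", fooled_ctrl, "of", n_models)
```

The control matters because it changes the image by exactly the same amount as the adversarial version. If only the aligned perturbation flips labels, the effect comes from where the changes land, not from the image simply being noisier.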

Participants didn’t have much time to visually process the images, only between 60 and 70 milliseconds, after which they were asked to identify the object in the photo. In one example, a dog was made to look like a cat, producing an adversarial image that was identified as a cat 100 per cent of the time. Overall, humans had a harder time distinguishing objects in adversarial images than in unmodified photos, which means these photo hacks may transfer well from machines to humans.

Tricking a human into thinking a dog is a cat by literally making the dog look like a cat may not seem profound, but it shows that scientists are getting closer to creating visual recognition systems that process images similarly to the way that humans do. Ultimately, this will result in superior image recognition systems, which is good.

More ominously, however, the production of modified or fake images, audio, and video is becoming an area of growing concern.

The Google Brain researchers worry that adversarial images could eventually be used not only to generate so-called fake news, but also to subtly manipulate humans.

“For instance, an ensemble of deep models might be trained on human ratings of face trustworthiness,” write the authors. “It might then be possible to generate adversarial perturbations which enhance or reduce human impressions of trustworthiness, and those perturbed images might be used in news reports or political advertising.”

So a politician who’s running for office could use this technology to adjust their face in a TV ad so that they appear more trustworthy to the viewer. Damn. It’s like subliminal advertising, but one that taps into the vulnerabilities and unconscious biases of the human brain.

The researchers also point out some happier-sounding possibilities, such as using these systems to make boring images, like air traffic control data or radiology images, look more appealing. Sure, but AI will make those jobs obsolete anyway. As Ackerman points out, “I’m much more worried about the whole hacking of how my brain perceives whether people are trustworthy or not, you know?”