Technologists like to put machine learning on a pedestal, exalting its ability to complement and even exceed human labour, but these systems are far from perfect. In fact, they are still tremendously vulnerable to self-owns. A research paper from January details how just how easy it is to trick an image recognition neural network.

“If you start from a firetruck, you just need to rotate it a little bit and it becomes a school bus with almost near-certain confidence,” Anh Nguyen, assistant professor of computer science at Auburn University and a researcher on the study, told Gizmodo in a phone call on Thursday.

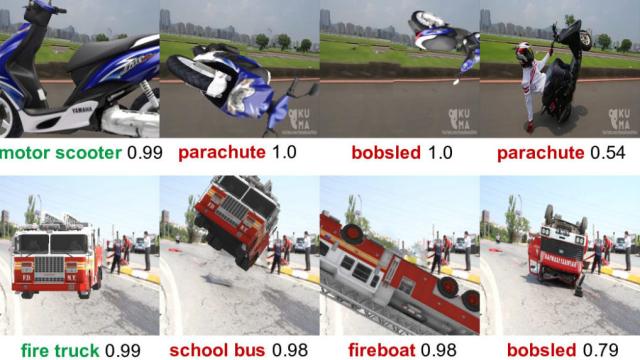

The paper, titled “Strike (with) a Pose: Neural Networks Are Easily Fooled by Strange Poses of Familiar Objects,” collected a dataset of 3D objects from ImageNet, rotated them, and then tested the image classification of a deep neural network. When the objects’ positions were slightly altered, the neural nets misclassified them 97 per cent of the time.

The researchers point out in the paper how this astonishing failure rate might have consequences offline, using self-driving cars as an example. They acknowledge that in the real world, “objects on roads may appear in an infinite variety of poses” and that self-driving cars need to be able to correctly identify objects that may appear in their path in order to “handle the situation gracefully and minimise damage.”

In other words, if the image recognition software of a self-driving car can’t identify a firetruck in its path because it’s positioned in a way that doesn’t match the image in its dataset, there are potentially deadly consequences for both parties.

Gizmodo spoke with Nguyen about his most recent paper as well as other work involving image recognition AIs and their ability to be fooled.

This interview has been edited for length and clarity.

Gizmodo: The self-driving car example is a really interesting way of understanding some of the more catastrophically harmful consequences. Is there a more mundane example that people may not realise this type of recognition applies to, where if it can’t recognise an object it’s not going to work effectively?

Nguyen: You can imagine the robots in the warehouse or the mobile home robots that look around and try to pick up the stuff or find keys for you. And these objects lying around can be in any pose in any orientation. They can be anywhere. You do not expect them to be in canonical poses and so they will be fooled by the adversarial poses.

That’s one, but you can also think of the TSA in airports and security. If you scan a bag of someone, the objects can be in any orientation, in any pose. You could also see this, for example, in the battlefield where people now have applied automated target recognition to the battlefield. Anything can happen in a battlefield, so you don’t expect things to be in canonical poses. There are many applications where this vulnerability will be a bigger problem.

Gizmodo: Were there any other examples of images that didn’t make it into the study?

Nguyen: There are many images. We generated way more than we can include, of course. I think the interesting cases can be divided into two types. One type is the firetruck into a school bus. What is interesting to me is you only need a small change. And now this is a school bus with very, very high confidence. You could also just change it by a few pixels and the prediction can be changed to some other classifier. That’s a sensitivity issue.

The second one is the taxi. It’s very funny, if you look at it through binoculars or all the way to the right, it’s a fork lift. These other poses that never existed in the training set, because humans never had a chance to capture them, but here through the simulation we can rotate the objects in any pose and identify them. These poses are never in the training set, so the computer never knows about them, but we humans can easily recognise it as a taxi.

Gizmodo: Zooming out a little bit to your previous work, can you list a few examples of other ways in which image recognition AIs were fooled? What were some of the more surprising examples that you’ve seen through your work?

Nguyen: You could look at the project “Deep Neural Network are Easily Fooled.” These were some of the most surprising at that time. We were able to generate a bunch of images that look just like TV static, like noise. However, the network is near-certainty confident that these are cheetahs, armadillos or pandas. That’s one surprising set of results. Another set is we were able to generate other types of garbage, images and patterns that look almost like nothing. But again they are classified as starfish, baseballs, electric guitars, and so on. Almost the opposite of the latest work.

There’s also those adversarial examples that look very similar to a real image, like if you take a real image you change a few pixels and now it’s misclassified as something else. It’s an iterative process. In every iteration we try to change a few pixels in the direction of increasing the network’s confidence that it is something else. So by iteratively changing by pixel, we will reach a point that this image becomes highly classified as a banana. But every iteration we only change a few pixels.

Gizmodo: So it’s classified that way, what does it look like to the human eye?

Nguyen: We could change this to be very small, such that the image looks just like the original image, the modified one looks just like the original image, and that’s a very fascinating thing about the vulnerability. So if you have a school bus image, you can change a pixel, a pixel, a pixel until it’s misclassified as a banana but then the modified one looks just like the school bus.

Gizmodo: Is there a solution yet to this issue, or is it kind of relying on more research into these image recognition systems?

Nguyen: It depends on what we want to do. If we want to, let’s say, have reliable self-driving cars, then the current solution is to add more sensors to it. And actually you rely on these set of sensors rather than just images so that’s the current solution. If you want to solve this vision problem, just prediction based on images, then there’s no general solution. A quick and dirty hack nowadays is to add more data, and in the model world naturally they become more and more reliable, but then it comes at a cost of a lot of data, millions of data points.

Gizmodo: This was an interesting take, to slightly rotate an object and something is misclassified. Is there another way that you’re looking into how objects or images are manipulated that might fool AIs?

Nguyen: In terms of fooling, this is our latest work. We are more interested now in what would be the fix. Because the latest work already shows that you take an object and you find a small change, when you rotate it, and it fools a neural net. That is already arguably the simplest way to fool and it shows how brittle the networks are. We are more looking into how to fix it.