Microsoft has a lot riding on AI, so much so that it's reportedly developing its own superpowered chip designed specifically for training and running its sophisticated chatbot systems. This chip, code-named "Athena," would need Olympus-sized power to channel all of Microsoft's ambitions in the AI arena.

On Tuesday, The Information reported, citing two anonymous sources with direct knowledge of the project, that Microsoft's upcoming Athena chip has been in the works since 2019. That was also the year the Redmond, Washington tech giant made its first investment in OpenAI, the maker of ChatGPT and GPT-4. Per the report, the chip is being tested behind the scenes by a small number of Microsoft and OpenAI staff. The chip is reportedly designed to handle both training and running the company's AI systems, which means it's intended for Microsoft's internal setups rather than your personal PC.

Gizmodo reached out to Microsoft, which declined to comment. Of course, it makes sense that the Redmond company is trying to develop its own proprietary tech to handle its growing AI ambitions. Ever since it added a ChatGPT-like interface to its Bing app, the company has worked to install a large language model-based chatbot into everything from its Microsoft 365 apps to Windows 11 itself.

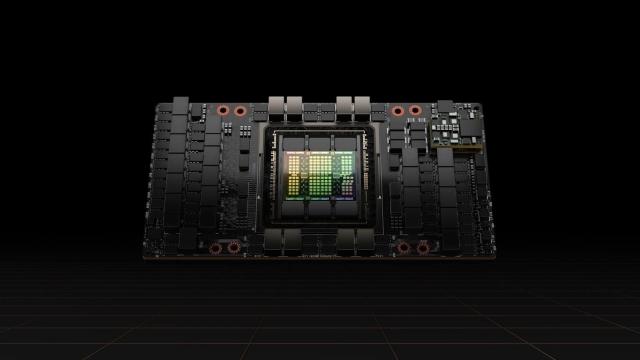

Microsoft is trying to cut down on the money it pays outside AI chip makers. Nvidia is the biggest player in this arena, with its $US10,000 A100 chip taking up more than 90% of the datacenter GPU market for both training and running AI, according to investor Nathan Benaich in his State of AI report from last October.

Now Nvidia has a more advanced chip, the H100, which the chipmaker says delivers up to nine times the training performance of its previous AI training chip. Nvidia claimed last month that OpenAI and Stable Diffusion-maker Stability AI each used H100s in part to train and run their current-gen AI models. Thing is, the H100 isn't cheap. As first reported by CNBC, the newer GPU has sold for upwards of $US40,000 on eBay, up from closer to $US36,000 in the past.

Nvidia H100 GPUs going for $40k on eBay. pic.twitter.com/7NOBI8cn3k

— John Carmack (@ID_AA_Carmack) April 14, 2023

Nvidia declined to comment on Microsoft's reported plans to stop relying on the company's tech. Though Nvidia maintains the biggest lead in AI training chips, the company probably still wants to keep Microsoft as a customer. Last year, the U.S. introduced new restrictions to keep the company from sending its A100 and H100 chips to Russia and China. Last month, Nvidia said it was expanding cloud-based access to its H100 chips, and that Meta was also jumping on the H100 bandwagon.

Competition and costs are reportedly speeding up Athena's development. Google, the other major tech giant trying to make a statement in the burgeoning AI industry, is also working on its own AI chips. Earlier this month, the company offered more details on its Tensor Processing Unit supercomputers. The company said it connected several thousand of these chips together to build a machine learning supercomputer, and that this system was used to train its PaLM model, which in turn was used to create its Bard AI.

Google even claimed its chips use two to six times less energy and produce roughly 20 times less CO2 than "contemporary DSAs" (domain-specific architectures). Given how much energy it takes to train and run these AI models, Microsoft's new chip will also need to contend with the massive environmental cost of AI's proliferation.