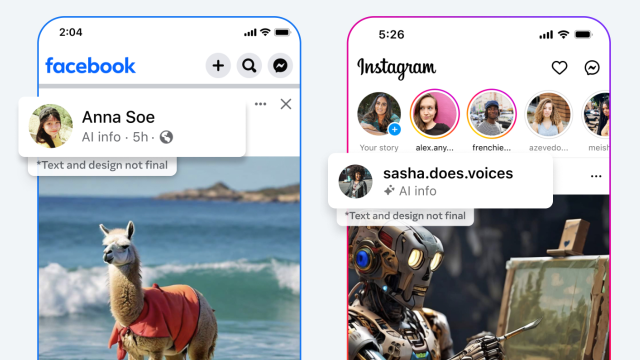

Meta is rolling out labels and watermarks for AI images posted on its platforms (Facebook, Instagram, and Threads), and has said that users will need to label any AI-generated audio or video themselves, or they may face penalties on the platform.

While this is a welcome move that should help slow the spread of misinformation on social media platforms, watermarks are not enough to fully address AI’s social impacts – especially where state actors are involved, according to Techinnocens founder Matthew Newman.

Newman’s organisation works with governments and enterprises on how to responsibly and ethically utilise AI, and while he was happy to see this move from Meta, he was quick to note that it may not be enough.

“If you’ve got state actors for example, or someone with real, serious intent and resources, this [the changes] is probably not going to stop them. They’ve probably developed their own models, if they’re really that level, or they might use open source to circumvent.

“The situation then is – if we blindly trust those labels, that makes us kind of vulnerable.”

AI watermarking with a focus on consumers

Meta’s labelling is aimed squarely at consumers, so the warning could target the odd post that someone puts up in a group and claims is real, but which was whipped together in a generator like Midjourney. You may have been misled by an AI-generated image at some point on social media over the past year or so – I certainly have. It can often be difficult to tell.

Samsung recently rolled out similar watermarking tools in tandem with its new AI features on the Galaxy S24 range – bodies of text have a label that appears at the footer of the prompt box, while images have a transparent tag in the corner and a tag in the metadata. While these features might make it easy to cut the bullshit, they aren’t perfect – and can easily be removed.

“Until AI is officially regulated, each of the companies [that Samsung is working with], including Samsung, we are taking a forward-looking stance around ‘how do we ensure the protection of what is original, versus what is AI’,” Samsung Australia’s head of mobile Eric Chou told Gizmodo Australia in January. Chou added that Samsung would happily comply with any regulation introduced around AI, including any relating to watermarks.

Watermarking is essential for combatting the spread of AI-generated misinformation, but for the most part, it’s been presented as a consumer-oriented solution. A bad actor creating AI content doesn’t necessarily need to worry about it, especially if they’re using a generator trained without watermarking, whose output can slip past any detection.

While these two companies are addressing something essential in the AI space, Meta has a much bigger issue on its hands – enforcement. The Galaxy AI suite can certainly be used maliciously, but Samsung doesn’t have to worry so much about, for example, an AI-generated image of the Eiffel Tower going viral on its platforms. Meta very much does.

How do you enforce a label on social media?

“We’ll require people to use [a] disclosure and label tool when they post organic content with a photorealistic video or realistic-sounding audio that was digitally created or altered, and we may apply penalties if they fail to do so,” Meta wrote in a post announcing the new AI labelling initiative.

“If we determine that digitally created or altered image, video or audio content creates a particularly high risk of materially deceiving the public on a matter of importance, we may add a more prominent label if appropriate, so people have more information and context.”

And that’s all very important to do, particularly as the U.S. heads into this year’s federal election, but the very nature of Meta’s platforms appears antithetical to these strategies, at least according to Matthew Newman.

“These platforms exist for reposting,” Newman said. “The reach on these platforms isn’t having the original post, it’s getting people to repost it, and repost it. If you’ve got data that isn’t watermarked, the threats of penalties only work if the person knowingly does so.”

Meta’s ability to detect AI-generated content on its own is only as strong as its collaboration with companies like Microsoft, Google, OpenAI, Adobe, Midjourney, and Shutterstock, which have each implemented metadata tags on images created with their programs.

Meta might be able to detect AI-generated images carrying watermarks built on the C2PA (Coalition for Content Provenance and Authenticity) and IPTC (International Press Telecommunications Council) standards, but open-source and independently created image generators may not be as easy to detect if they’re not leaning on established standards.

Meta claims that companies are yet to start including easily identifiable markers in AI-generated video and audio, which makes detection all the more difficult. Text is harder still: it’s often hard to tell whether it has been AI-generated, and Newman believes the vast majority of AI-generated misinformation tends to come from text.

“I don’t think we’ll ever get back to the point where we can actually put this completely back in the box … Most of the fraud and deception uses quite cheap technology, like phishing emails. It’s people sending out mail saying ‘I need someone to store this inherited fortune’ or whatever. That’s just text; if we make it kind of more challenging and costly to deceive with fake media … then we keep it slightly more in the box, by making the cost of these more sophisticated methods unappealing to fraudsters.”

Image: Meta