Image: Futurama, 20th Century Fox

As robots and AI start to play an increasing role in our lives, the question of how we want them to behave gets more pressing with each passing breakthrough. In the new book, Robot Ethics 2.0: From Autonomous Cars to Artificial Intelligence, robo-ethicists Will Bridewell and Alistair M. C. Isaac present a surprising argument in which they make the case for deceptive robots. We asked the authors why we’d ever want to do such a crazy thing.

Published this week, Robot Ethics 2.0 was edited by Patrick Lin, Ryan Jenkins, and Keith Abney, all three of whom are researchers at the Ethics + Emerging Sciences Group at California Polytechnic State University. This is the second edition of the book; the inaugural version appeared five years ago.

Like the first book, Robot Ethics 2.0 brings together leading experts to discuss the emerging topics around robotics and machine intelligence. The new book includes chapters on artificial identity, our tendency to anthropomorphize robots, sexbots, the future liability and risk with robots, trust and human-robot interaction, and many other topics.

One of the more intriguing essays, “White Lies and Silver Tongues: Why Robots Need to Deceive (and How),” is authored by Will Bridewell, a computer scientist at the U.S. Naval Research Laboratory, where he works at the intersection of artificial intelligence and cognitive science, and Alistair M. C. Isaac, a Lecturer in Mind and Cognition at the University of Edinburgh, where he is program director for the MSc in Mind, Language, and Embodied Cognition. They argue that deception is a regular feature of human interaction, and that misleading information (or withholding information) serves important pro-social functions. Eventually, they argue, we’ll need robots with the capacity for deception if they’re to contribute effectively to human communication.

Gizmodo: Robots that are deliberately programmed to deceive humans have been portrayed numerous times in science fiction. What are some of your favourite examples, and what do these “cautionary tales” tell us about the consequences of fibbing robots?

Will Bridewell: The dangerous, fictional robots that readily come to my mind are not programmed to deceive. Consider Ash in Ridley Scott’s Alien, which relentlessly pursues the orders of the corporation directing the crew’s mission and takes to heart the parameter that the crew is expendable. Throughout the film, Ash does not lie to the crew about the mission, but it does withhold information under orders from the corporation, which are for “science officer eyes only.” As evidence that it is programmed to be truthful, its last words to the remaining crew begin, “I can’t lie to you about your chances.” This, and other examples like it, suggest that truthfulness and morality need not be aligned.

Interestingly, one of the most loved robots in film does lie. R2D2 from George Lucas’s Star Wars deceives people and robots through both omission and direct lies to reach its goal of delivering the blueprints for the Empire’s planet-destroying Death Star to the Rebel Alliance. Some of the most obvious lies occur after the protagonist, Luke Skywalker, activates a hidden message that R2D2 is carrying: the robot says that it is old data. It then goes on to say that the restraining bolt, which prevents escape, is interfering with its ability to play the full message. After the bolt is removed, R2D2 pretends that it knows nothing about a message and eventually runs off. That is not exactly a cautionary tale.

What are you doing, Dave? (Image: 2001: A Space Odyssey)

Alistair M. C. Isaac: I think one of the most interesting “cautionary” examples from science fiction is very instructive here: HAL 9000 from Stanley Kubrick’s 2001: A Space Odyssey. Hal exhibits psychotic behaviour, killing members of the crew, as a response to the tension it faces between the ship’s ostensible mission to Jupiter and its secret mission to investigate the monolith’s signal, a secret to which only Hal is privy.

What’s interesting is that Hal’s psychotic behaviour is not the direct result of the orders it receives, but rather an indirect result emerging from Hal’s inability to produce appropriate forms of deceptive behaviour. Ironically, if Hal knew how to deceive, it could have responded as intended, and simply kept the crew in the dark about their true goal until Jupiter was reached. The moral here is not that Hal kills the crew because it was programmed to lie, but because it wasn’t programmed how to lie.

Gizmodo: What are some socially important forms of human-on-human deception?

Isaac: It’s worth pointing out that “deception” is a loaded term. We typically only call an act deceptive if we judge it to be somehow morally reprehensible. If instead we think about deception simply as an act intended to misdirect, or induce a false belief, stripped of any judgment of right or wrong, we start to see socially positive acts of “deception” all around us. An example I’m particularly fond of is water-cooler talk, the kind of casual bull session one has about the news, sports, or office gossip with one’s colleagues: often during such casual chats, we don’t care about whether what we’re saying is true or false. I might express interest in some sports team because I know you are a fan, say, even though I don’t particularly care for them. While it might be technically “deceptive” to claim I care about the team, what’s really important for building a positive social atmosphere is the interest I show in you and your interests. A classic example of the same kind is complimenting a colleague on their clothes or new haircut. Such compliments serve the purpose of reinforcing our positive sense of being part of the same group and caring about each other, and this effect is achieved whether I actually like your new haircut or not.

Bridewell: A common, and relatively complex, form of socially valuable deception involves “impression management.” This can happen when you have competing goals. For instance, you might have a long-term goal for a promotion at work but disagree with your supervisor’s short-term plans for a project. If your supervisor prefers team cohesiveness, you might be better off professionally if you “go along to get along” on the project and avoid risking your advancement.

The “Scotty Principle” of deception is as rational as it is sensible. (Image: Star Trek)

As another example, consider a second form of impression management. The Scotty Principle, which is named after a character in Gene Roddenberry’s Star Trek, involves multiplying the anticipated time to finish a complex task by three. This practice serves two functions. First, from a selfish perspective, if you finish early, then you look brilliant and efficient. Second, from a social perspective, you create a (secret) buffer for unexpected contingencies that could arise. The creation of this buffer not only protects you, but it protects your supervisor from making time-sensitive plans that could be derailed by slight problems. Interestingly, the Scotty Principle also protects against self-deception. You might think that you are more skilled or faster than you really are, and this form of exaggeration can prevent failure due to inaccurate self-knowledge.

Gizmodo: White lies and a bit of deception are understandably important in human interaction, but that’s because we’re emotional and sensitive creatures. Why would we want our robots to adopt our foibles?

Bridewell: There are considerable efforts around the world to develop robots that will work closely with humans as guides, nurses, and teammates. Moreover, the robots that most capture our imaginations are those that interact with people, which suggests an existing social demand that personal assistants like Apple’s Siri and Microsoft’s Cortana can only partly address. For these sorts of robots to communicate effectively with humans, they need the ability both to interpret the signals implied by human emotions and to portray those emotions as a way to effectively express the information they carry. Because the jury is still out on whether robots can ever “have” emotions, it is safe to say that any robot that can signal human-like emotions would be deceptive, especially if it could differentiate between human and robot emotional content.

Isaac: I’d like to question the implication that emotion and sensitivity are “foibles.” Some philosophers think that the ability to experience emotions grounds our power to empathise with, and thus act ethically toward, our fellow man. If we want robots that can act ethically, they may need to exhibit similar forms of sensitivity. Even if robots can’t “feel” emotions the way we do (and this is an open question), they may still have to simulate our sensitivity in order to reason about it, and thereby calculate ethical actions.

I would guess that even robots in a purely mechanised “society,” with no human participants, would need some analogue to our capacities for sensitivity and empathy, some way in which they can simulate and reason about the inner responses of other robots, in order to behave in a way recognisable at all to us as ethical, or “humane.”

Gizmodo: How can we program a robot to be an effective deceiver?

Bridewell: There are several capacities necessary for recognising or engaging in deceptive activities, and we focus on three. The first of these is a representational theory of mind, which involves the ability to represent and reason about the beliefs and goals of yourself and others. For example, when buying a car, you might notice that it has high mileage and could be nearly worn out. The salesperson might say, “Sure, this car has high mileage, but that means it’s going to last a long time!” To detect the lie, you need to represent not only your own belief, but also the salesperson’s corresponding (true) belief that high mileage is a bad sign.

Of course, it may be the case that the salesperson really believes what she says. In that case, you would represent her as having a false belief. Since we lack direct access to other people’s beliefs and goals, the distinction between a lie and a false belief can be subtle. However, if we know someone’s motives, we can infer the relative likelihood that they are lying or expressing a false belief. So, the second capacity a robot would need is to represent “ulterior motives.” The third capacity addresses the question, “Ulterior to what?” These motives need to be contrasted with “standing norms,” which are basic injunctions that guide our behaviour and include maxims like “be truthful” or “be polite.” In this context, ulterior motives are goals that can override standing norms and open the door to deceptive speech.
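To make the three capacities concrete, here is a minimal Python sketch built around the car-lot example. It is a toy illustration of our own, not the authors’ system: the `Agent` structure, the numeric priorities, and the `speak` and `interpret` functions are all hypothetical, and real belief modelling is far messier than a dictionary of true/false values. A speaker stays truthful unless an ulterior motive outranks the standing norm “be truthful”; a listener uses its model of the speaker’s beliefs (theory of mind) to tell a lie from a false belief, and falls back on suspected motives when it has no such model.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Agent:
    """Hypothetical toy agent, not the architecture described in the chapter."""
    name: str
    # Own beliefs: proposition -> believed truth value.
    beliefs: dict = field(default_factory=dict)
    # Theory of mind: other agent's name -> that agent's modelled beliefs.
    others: dict = field(default_factory=dict)
    # Standing norms such as "be truthful", each with an (assumed) priority.
    norms: dict = field(default_factory=dict)
    # Ulterior motives (goals) that may compete with the norms.
    motives: dict = field(default_factory=dict)


def speak(speaker: Agent, prop: str, motive: Optional[str] = None) -> bool:
    """Return the truth value the speaker asserts for `prop`.

    The speaker asserts what it believes unless the active ulterior
    motive outranks the standing norm "be truthful".
    """
    believed = speaker.beliefs[prop]
    truth_priority = speaker.norms.get("be truthful", 0)
    motive_priority = speaker.motives.get(motive, 0) if motive else 0
    if motive_priority > truth_priority:
        return not believed  # the motive overrides the norm
    return believed


def interpret(listener: Agent, speaker: str, prop: str, asserted: bool,
              suspects_motive: bool) -> str:
    """Classify an assertion from the listener's point of view."""
    own = listener.beliefs.get(prop)
    if own is None or own == asserted:
        return "accept"
    modelled = listener.others.get(speaker, {}).get(prop)
    if modelled is not None:
        # The listener models what the speaker actually believes.
        return "lie" if modelled != asserted else "false belief"
    # No model of the speaker's belief: fall back on suspected motives.
    return "likely lie" if suspects_motive else "likely false belief"


PROP = "high mileage means the car will last"
seller = Agent("seller", beliefs={PROP: False},
               norms={"be truthful": 1}, motives={"make the sale": 2})
buyer = Agent("buyer", beliefs={PROP: False}, others={"seller": {PROP: False}})

claim = speak(seller, PROP, motive="make the sale")
print(interpret(buyer, "seller", PROP, claim, suspects_motive=True))  # lie
```

Here the seller’s motive to make the sale (priority 2) outranks the norm of truthfulness (priority 1), so it asserts that high mileage is a good sign; because the buyer models the seller as knowing otherwise, the claim is classified as a lie. A buyer with no model of the seller’s beliefs would have to lean on the suspected motive instead, which mirrors the point that motives help us judge the relative likelihood of a lie versus a false belief.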

Gizmodo: The thought of a robot lacking in conscious awareness — but with the capacity for deception — is, at least to me, a frightening prospect. Why shouldn’t we be alarmed by a string of 1’s and 0’s that deliberately puts false beliefs into our brains?

Isaac: Personally, I don’t see what consciousness has to do with it, or why a lying robot should be more frightening than a lying person. If you think that consciousness somehow mitigates a human’s ability to lie, it’s because you think that consciousness allows us to reflect on the rightness or wrongness of our actions, to feel empathy for others, or to question and assess our deceptive actions. But these are all just guesses about a distinctive functional role for consciousness. As long as a robot has some component playing the same functional role (algorithms to assess rightness or wrongness, simulate empathy, assess or question projected actions), it’s just as safe (or scary) as a lying human.

I think at the end of the day, this really comes down to the point that we feel safer around those who are like ourselves. You’re more frightened by a lying robot than a lying human because you recognise the human as like yourself, and that’s comforting. But we’d like to flip this concern around: if robots can lie in the socially positive ways mentioned above (complimenting us on our hair, or going along with the boss when it will help the team pull together), then we will come to perceive them as more like ourselves. That’s a good thing, if we want robots and humans to be able to work closely together as part of the same, cohesive, social unit.

Bridewell: It’s inaccurate to characterise deception as putting false beliefs into our brains. We are never forced to believe what we are told. Instead, we typically hold information in abeyance and evaluate it based on the source, context, and content. Whether the source is a traffic app on your phone, a salesperson at a car lot, or a robot in your workplace, you are given the same opportunities to accept or discount any communication. Of course, we learn to trust some sources over others, but even if we learn to trust someone, we don’t give up our ability to evaluate what they say before believing them.

Gizmodo: What kinds of ethical standards should be considered when developing deceptive robots?

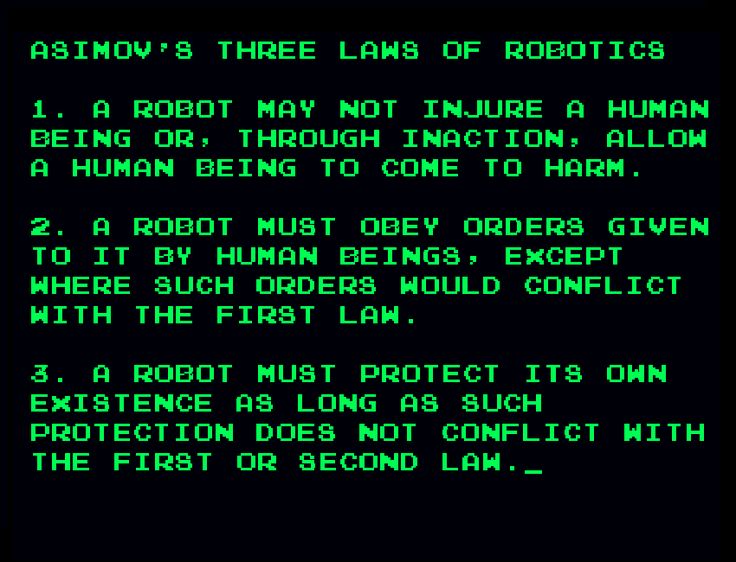

Isaac: Asimov’s Laws aren’t a bad place to start. We point out in the paper that satisfying Asimov’s Laws may actually require robot deception in some circumstances.

Asimov’s Three Laws of Robotics.

For instance, the first law stipulates that a robot may not harm a human being, or through inaction allow a human to come to harm, while the second says that robots must obey the orders given to them by humans. If a murderer demands a robot tell him the location of his intended victim, a robot with the ability to lie will do much better at satisfying Asimov’s Laws, and keeping humans safe, than one that can’t. The best a non-lying robot can do is stay silent, which may not help the victim much, while the lying robot could actually misdirect the murderer, sending him away from his victim and potentially saving a human life.

Bridewell: In terms of the ethics of building robots, the most important practice is to provide ethical training for computer scientists and roboticists. That means that they should be aware of the ethical status of their work and understand the social implications of what they develop. Without that understanding, the developers cannot make informed decisions about what to implement, when, and how.

Of secondary importance, developers working on deceptive robots should be familiar with a variety of ethical traditions. There is an emphasis in machine ethics on deontology and consequentialism, but considerations of Aristotle’s virtue ethics and religious ethical thought (e.g., Buddhist ethics) can uncover blind spots in the more prominent views and may lead to perspectives that better track human intuitions about moral and ethical behaviour.

Gizmodo: Is this not getting AI off to a “bad” start, from which a more malign AI might emerge?

Isaac: I don’t think so. Our whole point is that deception per se isn’t bad, and is in fact an important part of humane and positive social interactions. If a simple artificial agent is to develop into a more powerful one that is “friendly” to humans, then the most important thing is that it be an integrated participant in human society, and this will require the ability to lie in socially positive ways.

It’s perhaps also worth saying that we’re a heck of a long way from the kind of powerful, post-human AIs we see in the movies. The paper is geared toward the much more modest goal of getting robots to be a little more socially adept, and even this is a long, long way in the future. If super-human AIs do emerge someday, however, socially adept robots will surely be a step in the history of their development.

Robot Ethics 2.0: From Autonomous Cars to Artificial Intelligence, edited by Patrick Lin, Ryan Jenkins, and Keith Abney, was published on October 3, 2017.