Have you ever ignored a seemingly random LinkedIn solicitor and been left with a weird feeling that something about the profile just seemed…off? Well, it turns out, in some cases, those sales reps hounding you might not actually be human beings at all. Yes, AI-generated deepfakes have come for LinkedIn and they’d like to connect.

That’s according to research that Renée DiResta of the Stanford Internet Observatory detailed in a recent NPR report. DiResta, who made a name for herself trudging through torrents of Russian disinformation content in the wake of the 2016 election, said she became aware of an apparent wave of fake, AI-generated LinkedIn profile images after one particularly strange-looking account tried to connect with her. The user, who reportedly tried to pitch DiResta on some unimportant piece of software, used an image with incongruities that struck her as odd for a corporate photo. Most notably, DiResta says she noticed the figure’s eyes were aligned perfectly in the middle of the image, a telltale sign of AI-generated images. Always look at the eyes, fellow humans.

“The face jumped out at me as being fake,” DiResta told NPR.

From there, DiResta and her Stanford colleague Josh Goldstein conducted an investigation that turned up over 1,000 LinkedIn accounts using images that they say appear to have been created by a computer. Though much of the public conversation around deepfakes has warned of the technology’s dangerous potential for political misinformation, DiResta said the images, in this case, seem overwhelmingly designed to function more like sales and scam lackeys. Companies reportedly use the fake images to game LinkedIn’s system, creating alternate accounts that send out sales pitches without running up against LinkedIn’s limits on messages, NPR notes.

The face looked AI generated, so my first thought was spearphishing; it’d sent a “click here to set up a meeting” link. I wondered if it was pretending to work for the company it claimed to represent, since LNKD doesn’t tell companies when new accounts claim to work somewhere.

— Renee DiResta (@noUpside) March 28, 2022

“It’s not a story of mis- or disinfo, but rather the intersection of a fairly mundane business use case w/AI technology, and resulting questions of ethics & expectations,” DiResta wrote in a tweet. “What are our assumptions when we encounter others on social networks? What actions cross the line to manipulation?”

LinkedIn did not immediately respond to Gizmodo’s request for comment but told NPR it had investigated and removed accounts that violated its policies around using fake images.

“Our policies make it clear that every LinkedIn profile must represent a real person,” a LinkedIn spokesperson told NPR. “We are constantly updating our technical defences to better identify fake profiles and remove them from our community, as we have in this case.”

Deepfake Creators: Where’s The Misinformation Hellscape We Were Promised?

Misinformation experts and political commentators have warned of a deepfake dystopia for years, but the real-world results have, for now at least, been less impressive. The internet was briefly enraptured last year with this fake TikTok video featuring someone pretending to be Tom Cruise, though many users were able to spot the non-humanness of it right away. This and other popular deepfakes (like this one supposedly starring Jim Carrey in The Shining, or this one depicting an office full of Michael Scott clones) feature clearly satirical and relatively innocuous content that doesn’t quite sound the “Danger to Democracy” alarm.

Other recent cases, however, have tried to delve into the political morass. Previous videos, for example, have demonstrated how creators were able to manipulate a video of former President Barack Obama to say sentences he never actually uttered. Then, earlier this month, a fake video pretending to show Ukrainian President Volodymyr Zelenskyy surrendering made the rounds on social media. Again though, it’s worth pointing out this one looked like shit. See for yourself.

A deepfake of Ukrainian President Volodymyr Zelensky calling on his soldiers to lay down their weapons was reportedly uploaded to a hacked Ukrainian news website today, per @Shayan86 pic.twitter.com/tXLrYECGY4

— Mikael Thalen (@MikaelThalen) March 16, 2022

Deepfakes, even of the political bent, are definitely here, but concerns about society-stunting images have not yet come to pass, an apparent bummer that left some commentators asking after the U.S. election, “Where Are the Deepfakes in This Presidential Election?”

Humans Are Getting Worse At Spotting Deepfake Images

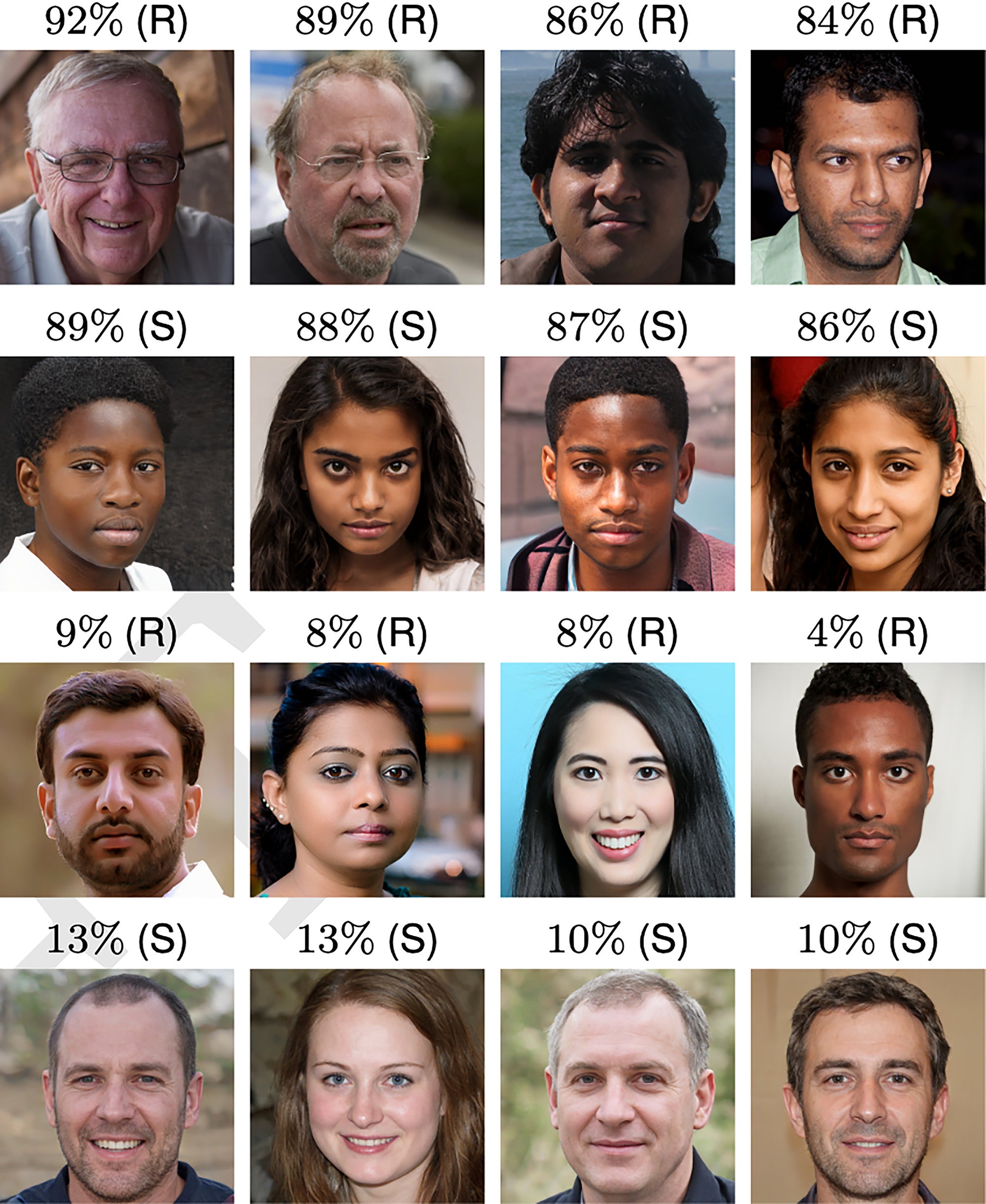

Still, there’s a good reason to believe all that could change…eventually. A recent study published in the Proceedings of the National Academy of Sciences found computer-generated (or “synthesized”) faces were actually deemed more trustworthy than headshots of real people. For the study, researchers gathered 400 real faces and generated another 400 extremely lifelike headshots using neural networks. The researchers used 128 of these images and tested a group of participants to see if they could tell the difference between a real image and a fake. A separate group of respondents was asked to rate how trustworthy the faces appeared, without being told that some of the images were not of real humans at all.

The results don’t bode well for Team Human. In the first test, participants were only able to correctly identify whether an image was real or computer generated 48.2% of the time. The group rating trustworthiness, meanwhile, gave the AI faces a higher trustworthiness score (4.82) than the human faces (4.48).

“Easy Access to such high-quality fake imagery has led and will continue to lead to various problems, including more convincing online fake profiles and — as synthetic audio and video generation continues to improve — problems of nonconsensual intimate imagery, fraud, and disinformation campaigns,” the researchers wrote. “We, therefore, encourage those developing these technologies to consider whether the associated risks are greater than their benefits.”

Those results are worth taking seriously. They raise the possibility of meaningful public uncertainty around deepfakes, which risks opening up a Pandora’s box of complicated new questions around authenticity, copyright, political misinformation, and big “T” truth in the years and decades to come.

In the near term though, the most significant sources of politically problematic content may not necessarily come from highly advanced, AI-driven deepfakes at all, but rather from simpler so-called “cheap fakes” that can manipulate media with far less sophisticated software, or none at all. Examples of these include a 2019 viral video purporting to show a hammered Nancy Pelosi slurring her words (that video was actually just slowed down by 25%) and this one of a would-be bumbling Joe Biden trying to sell Americans car insurance. In that case, a man’s poor impersonation of the president’s voice was simply dubbed over the actual video. While those are wildly less sexy than some deepfake of the Trump pee tape, they both gained massive amounts of attention online.