Google wants everyone to know that it’s indeed using its unprecedented access to the world’s ever-growing data to build out powerful new AI tools. But while newcomers to the field are releasing products at an astounding clip, Google’s battle scars from past fights with ethicists and regulators are forcing it to take a more cautious approach.

Google unveiled a handful of these new AI tools and use cases during its AI@ event in New York City on Wednesday. The company highlighted a variety of approaches ranging from purely practical to weirdly whimsical. Throughout the presentation, Googlers attempted to balance their desire for rapid technological advancement with an emphasis on having ethical considerations “baked in” to the technology, something critics argue the company and the tech industry as a whole have, until recently, utterly failed to properly address.

Google Will Let You Experiment With Its AI Image Generator…But Barely

2022 brought about wild advancements in generative AI applications thanks to a groundswell of text-to-image AI models from OpenAI, Stable Diffusion, and others, which collectively forced everyday users to re-imagine the possibilities and limitations of computer-generated content. Though Google has spent years and resources developing similar tech through its “Imagen” model, the world’s largest search engine took a cautious approach and, until now, refrained from opening its model up to the public.

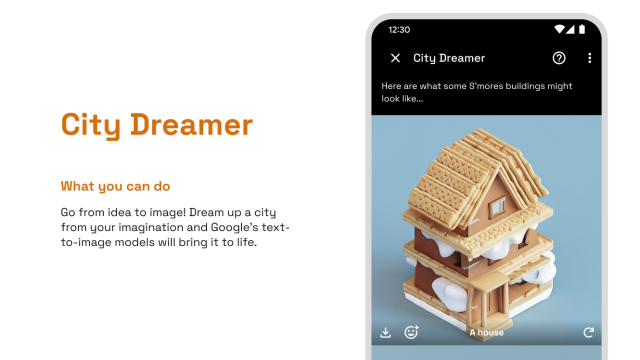

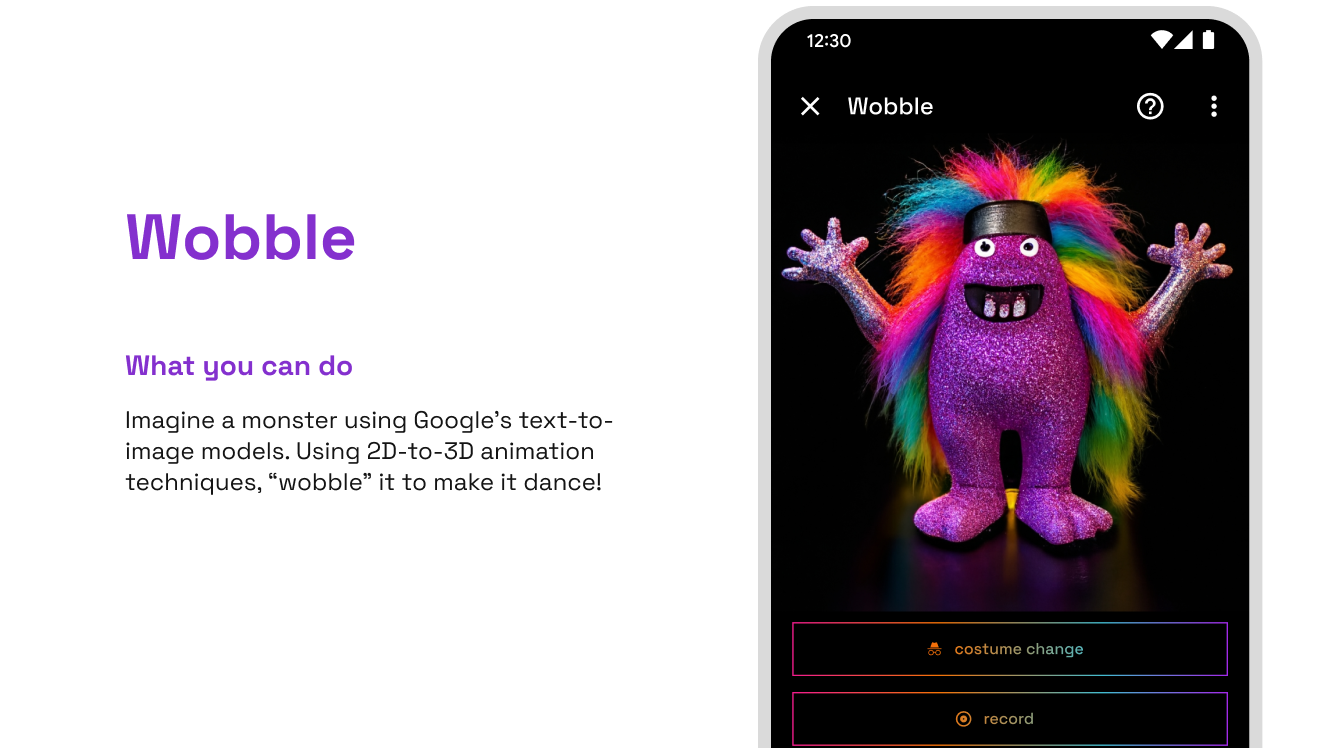

Now, Google says it’s adding a limited Imagen demo to its AI Test Kitchen app in a “Season 2” update. (Season 1 included demos showing off LaMDA, the company’s text-based chatbot that a certain Google researcher believed had attained the sentience of a small child.) Users experimenting with Imagen can use a variety of odd words to have the AI cook up a personalised cityscape in its “City Dreamer” demo. Meanwhile, the “Wobble” demo uses similar text prompts to let users create an AI-generated monster. Google hopes these extremely limited use cases will let users experience some of the tech and provide feedback while taking care to prevent significant unintentional harm.

Generative AI Videos That Actually Sort of Make Sense

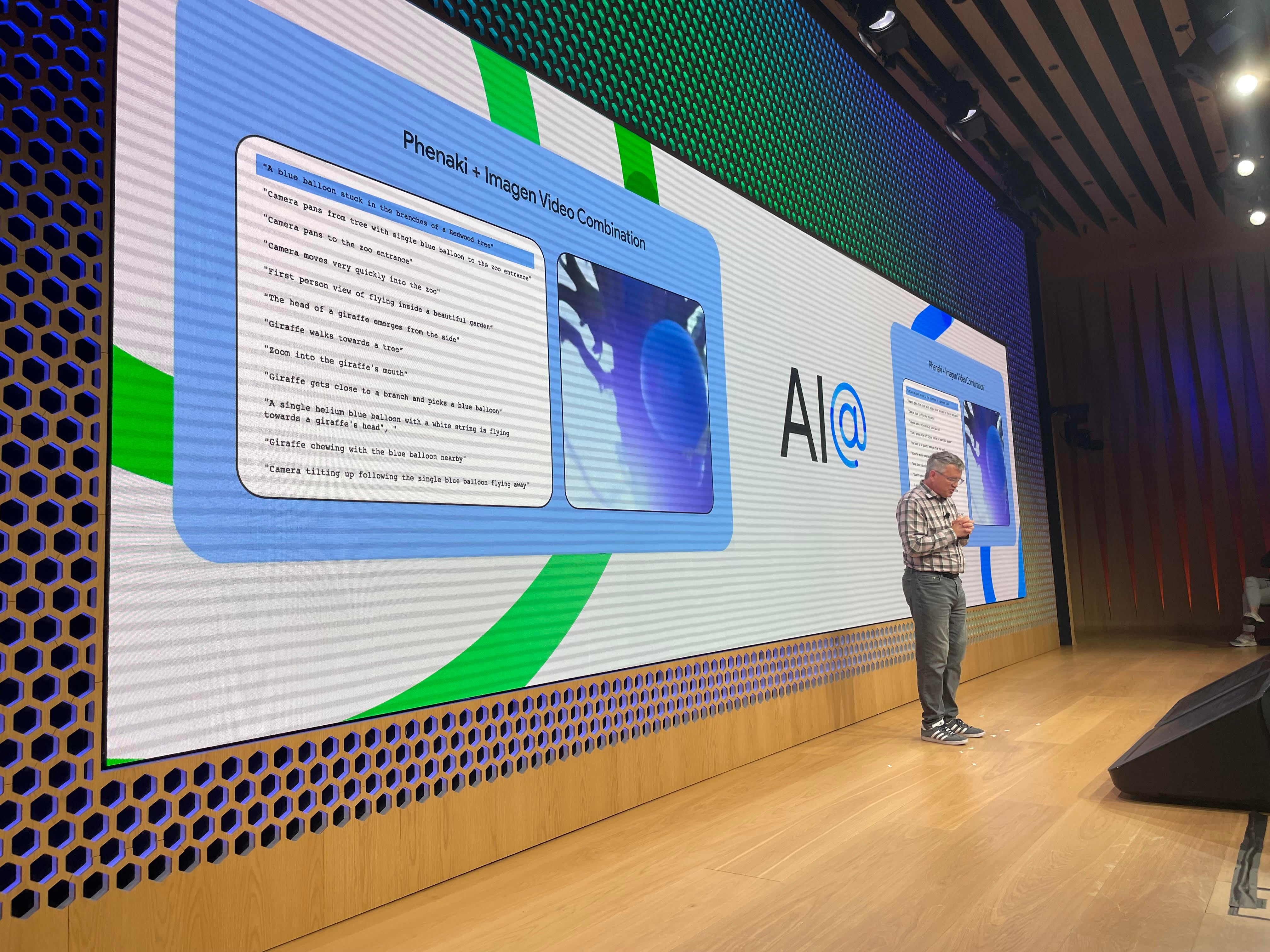

At the event Wednesday, Google showed off its answer to longer-form, AI-generated video narratives. While previous platforms from Meta and others already offer brief, rudimentary AI-generated videos based on text prompts, Google demoed an “AI-generated-super-resolution video” capable of creating a reliably coherent, 45-second story by combining its Imagen image generator with its Phenaki video generator, which debuted last month.

The demo followed a single blue helium balloon as it swirled around a park before eventually crossing paths with a wandering giraffe. Every few seconds of that video were accompanied by a series of related text commands displayed on an adjacent screen. When combined, the prompts and responses created a somewhat coherent video that followed a narrative structure. To be clear, the generated videos aren’t heading to The Criterion Collection anytime soon, but they did seem like a marked improvement over previous examples.

Speaking during the AI@ event, Google Brain Principal Scientist Douglas Eck said it’s “surprisingly hard” to generate videos that are both high resolution and coherent in time. That combination of visual quality and consistency over time is essential for movies, or really any other medium attempting to use images and videos to tell a coherent story.

“I genuinely think it’s amazing that we talk about telling long form stories like this,” Eck said.

Flood Detection Added in 18 New Countries

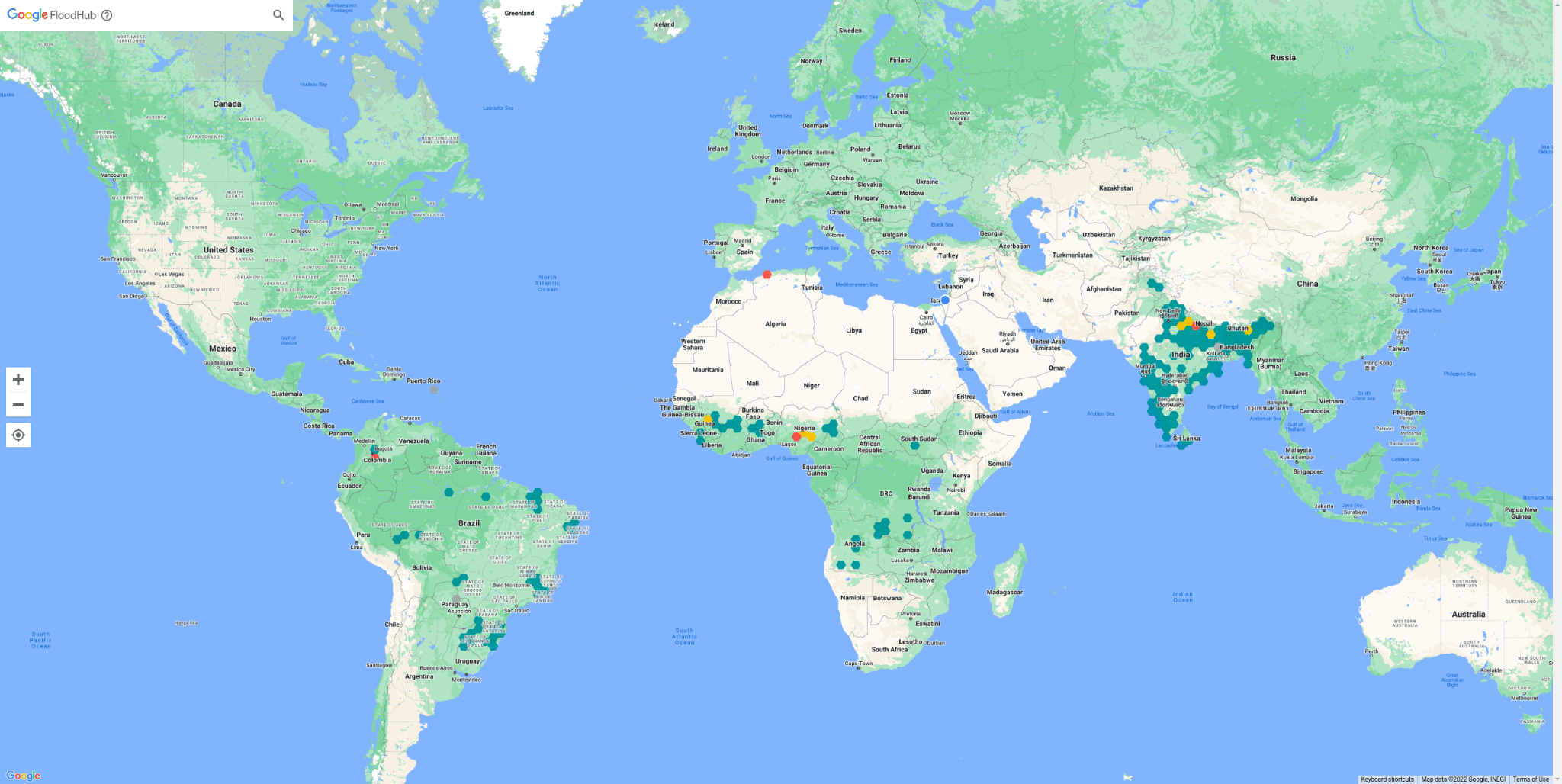

Among the more utilitarian use cases, Google said new AI advancements will improve its ability to monitor and track flooding and wildfires around the world. The company announced the launch of a new Flood Hub platform, which will attempt to analyse large weather data sets to display when and where flooding could occur in 20 countries.

Google started using AI to predict flood patterns back in 2018 in India’s Patna region. Three years later, an expanded version of that program helped send 115 million flood notifications to an estimated 23 million people in India and Bangladesh via Google Search and Maps. At the AI@ event, Google announced it added 18 new countries, mostly located in sub-Saharan Africa, to that program this week. If it works as intended, Google said Flood Hub could potentially provide flood forecasts for impacted areas up to seven days in advance.

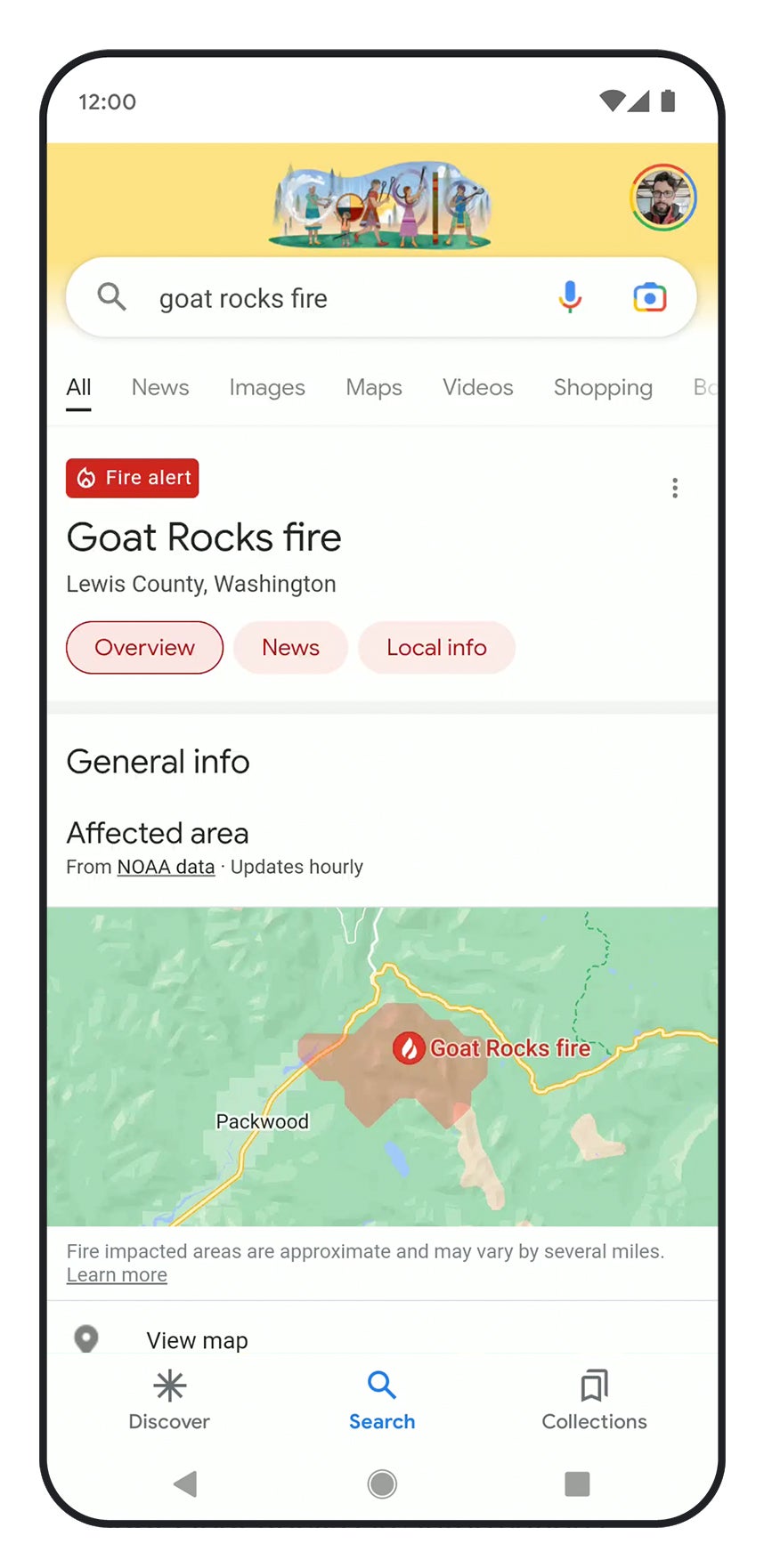

Wildfire Detection Aided by Satellite Imagery

Google will also expand its use of satellite imagery to train its models to better identify and track the spread of wildfires. The company hopes its wildfire tracking system, which now covers the United States, Canada, Mexico, and parts of Australia, could provide much-needed insights in real time for firefighters responding to disasters on the ground.

Google Will Undertake Ambitious “1,000 Languages Initiative”

Google Translate has come a long way since it launched in 2006. What was once a trivial translator tool barely capable of helping kids cheat on elementary school Spanish tests has evolved into an indispensable quick-hit Rosetta Stone for travellers and students alike. Well, some of them at least. Critics of Google and other Western translation tools have, for years, claimed those tools fail to provide anywhere near the same quality for less widely spoken languages.

In an effort to address some of those shortcomings, Google on Wednesday announced a multi-year effort to create an AI language model capable of supporting 1,000 of the world’s most spoken languages. As part of that initiative, Google is developing a so-called Universal Speech Model, which it says is trained on 400 different languages. Speaking at the AI@ event, Google Brain leader Zoubin Ghahramani said the Universal Speech Model represents the “largest language model coverage seen in a speech to date.”

Google Tries to Course Correct on Responsible AI

A recurring theme at Google’s AI@ event was one of supposed reflection and cautious optimism. That sentiment rang particularly true on the topic of AI ethics, an area Google has struggled with in the past and one that is increasingly important in the emerging, wild world of generative AI. Though Google has touted its own AI principles and Responsible AI teams for years, it has faced fierce blowback from critics, particularly after firing multiple high-profile AI researchers.

Google Vice President of Engineering Research Marian Croak acknowledged some potential pitfalls presented by the technologies on display Wednesday. Those include fears around increased toxicity and bias heightened by algorithms, further degradation of trust in news through deepfakes, and misinformation that can effectively blur the distinction between what is real and what isn’t. Part of addressing those risks, according to Croak, involves conducting research that gives users more control over AI systems, so that they are collaborating with those systems rather than letting them take full control of situations.

Croak said she believed Google’s AI Principles put users, safety, and the avoidance of harm “above what our typical business considerations are.” Responsible AI researchers, according to Croak, conduct adversarial testing and set quantitative benchmarks across all dimensions of the company’s AI. Researchers conducting those efforts are professionally diverse, and reportedly include social scientists, ethicists, and engineers.

“I don’t want the principles to just be words on paper,” Croak said. In the coming years, she said she hopes to see the capabilities of responsible AI embedded in the company’s technical infrastructure. Responsible AI, Croak said, should be “baked into the system.”