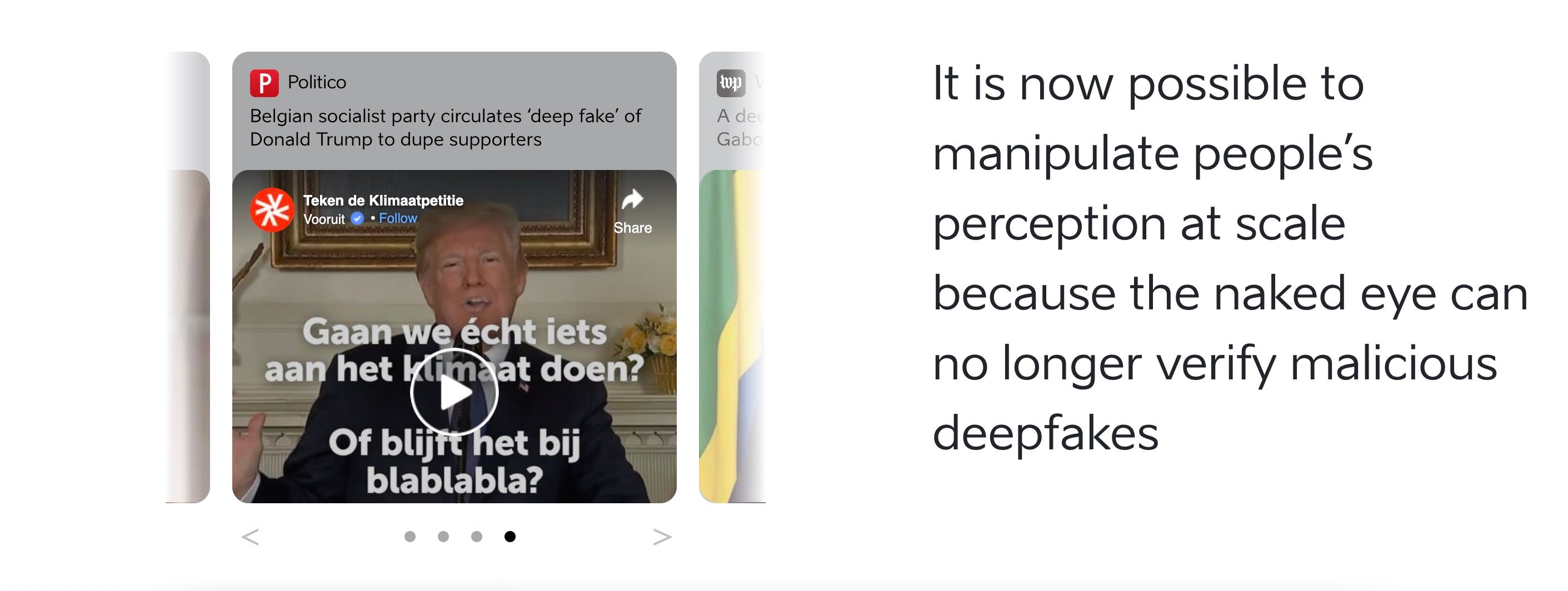

When an AI-generated image of an explosion outside the Pentagon proliferated on social media earlier this week, it offered a brief preview of the digital information disaster AI researchers have warned about for years. The image was clearly fabricated, but that didn’t stop several prominent accounts, like Russian state-controlled RT and Bloomberg News impersonator @BloombergFeed, from running with it. Local police reportedly received frantic communications from people who believed another 9/11-style attack was underway. The ensuing chaos sent a brief shockwave through the stock market.

The deepfaked Pentagon fiasco resolved itself in a few hours, but it could have been much worse. Earlier this year, computer scientist Geoffrey Hinton, referred to by some as the “Godfather of AI,” said he was concerned that the increasingly convincing quality of AI-generated images could mean the average person will “not be able to know what is true anymore.”

Startups and established AI firms alike are racing to develop new deepfake detection tools to keep that reality from arriving. Some of these efforts have been underway for years, but the sudden explosion of generative AI into the mainstream, driven by OpenAI’s DALL-E and ChatGPT, has brought a greater sense of urgency, and more investment, to the search for an easy way to detect AI falsehoods.

Companies racing to find detection solutions are working across all types of content. Some, like startup Optic and Intel’s FakeCatcher, are focused on sussing out AI involvement in audio and video, while others, like Fictitious.AI, are focusing their efforts more squarely on text generated by AI chatbots. In some cases, these detection systems seem to perform well, but tech safety experts like former Google Trust and Safety Lead Arjun Narayan fear the tools are still playing catch-up.

“This is where the trust and safety industry needs to catch up on how we detect synthetic media versus non-synthetic media,” Narayan told Gizmodo in an interview. “I think detection technology will probably catch up as AI advances but this is an area that requires more investment and more exploration.”

Here are some of the companies leading the race to detect deepfakes.

Intel FakeCatcher

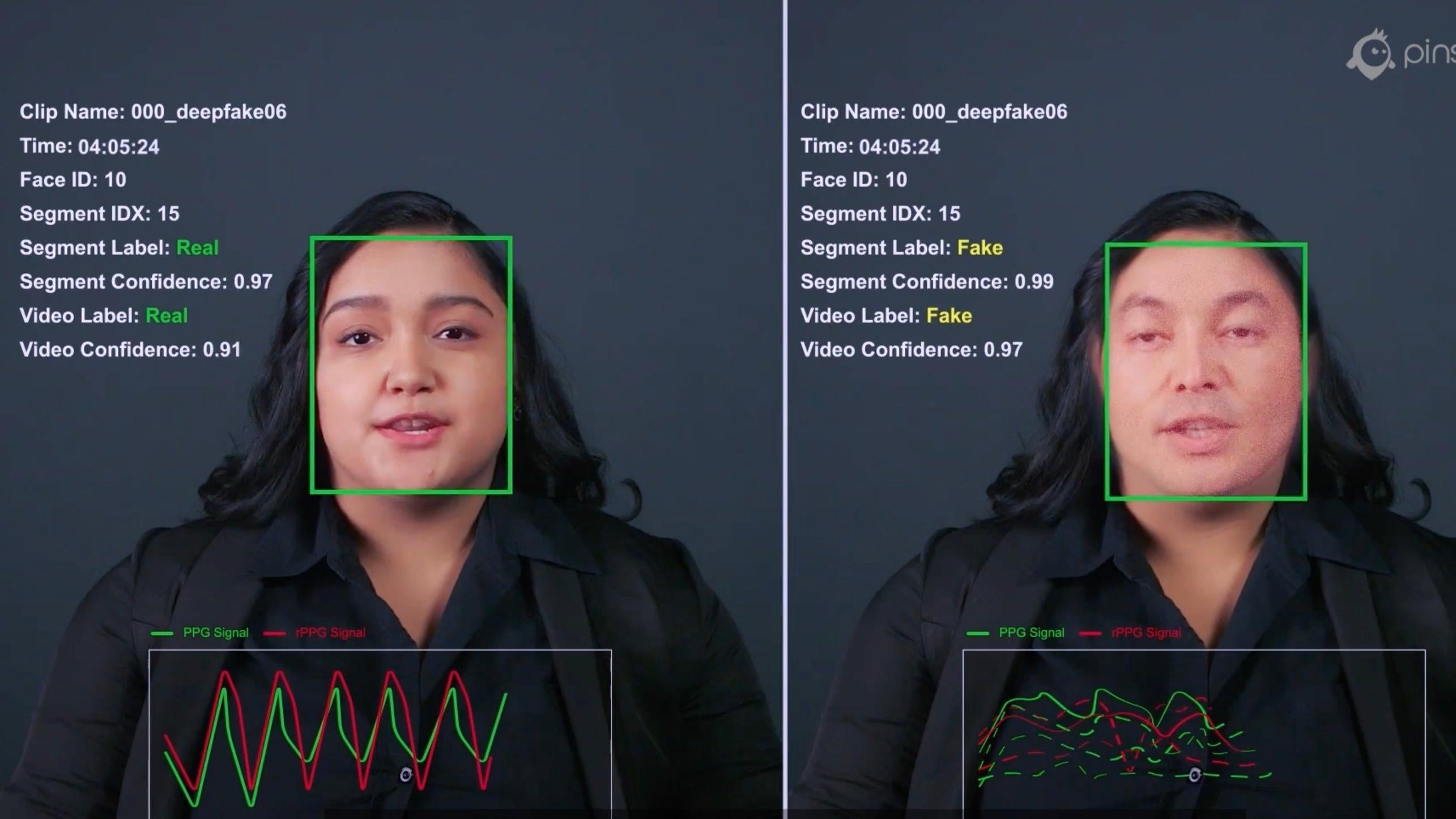

Chipmaker Intel is no stranger to AI research. Now, the storied tech giant is using that knowledge to build FakeCatcher, a “real-time” deepfake detection technology it claims can spot AI-generated videos 96% of the time.

Unlike other detectors that examine the raw data of a file or document, FakeCatcher analyses the faces in a video, looking for changes in blood flow that it says only occur in real humans. The system also uses eye-gaze-based detection to suss out whether a subject is human or AI-generated.

“We ask the question, what is the truth in humans?” Intel Labs Senior Staff Research Scientist Ilke Demir said. “What makes us humans?”
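Intel doesn’t detail FakeCatcher’s internals here, but the blood-flow signal it describes builds on a well-known technique called remote photoplethysmography (rPPG): each heartbeat slightly changes the colour of skin, most visibly in a video’s green channel. The Python sketch below is a simplified illustration of that general idea, not Intel’s method. It assumes a face region has already been located and averaged into one green-channel value per frame; the function name `pulse_estimate` and the 0.7 to 4 Hz heart-rate band are assumptions chosen for illustration.

```python
import math


def pulse_estimate(green_means, fps, low_hz=0.7, high_hz=4.0, step_hz=0.01):
    """Hypothetical rPPG helper (not Intel's API).

    green_means: mean green-channel value of the face region in each frame.
    Returns (estimated pulse in beats per minute, share of in-band power at the peak),
    or (None, 0.0) if the signal never varies.
    """
    mean = sum(green_means) / len(green_means)
    centred = [v - mean for v in green_means]  # drop the constant skin-tone level

    powers = []
    steps = int(round((high_hz - low_hz) / step_hz))
    for k in range(steps + 1):
        f = low_hz + k * step_hz  # candidate heart rate in Hz
        # Correlate the signal with a cosine and sine at f (a single-bin Fourier transform).
        re = sum(x * math.cos(2 * math.pi * f * i / fps) for i, x in enumerate(centred))
        im = sum(x * math.sin(2 * math.pi * f * i / fps) for i, x in enumerate(centred))
        powers.append((f, re * re + im * im))

    total = sum(p for _, p in powers)
    if total == 0:
        return None, 0.0  # flat signal: no pulse-like variation at all
    peak_hz, peak_power = max(powers, key=lambda fp: fp[1])
    return peak_hz * 60.0, peak_power / total
```

A real face should produce a clear peak at a plausible heart rate, while a face whose skin colour never pulses gives a flat or noisy spectrum. Feeding in a simulated 1.2 Hz signal sampled at 30 frames per second returns an estimate close to 72 beats per minute.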

Microsoft

Long before Microsoft tried to revive its Bing search engine with ChatGPT integration, the Big Tech giant was looking into ways to detect deepfakes. In 2020, the company announced Microsoft Video Authenticator, which analyses photos or videos and spits out a confidence score indicating whether the content was digitally manipulated. Microsoft says its detector does this, in part, by picking up subtle fading or grayscale elements and other minute details that may be undetectable to the human eye.

Microsoft’s detector seemed advanced for its time, but it’s unclear how well the same system would stack up against the flurry of new AI image generators that have popped up over the past three years. The company even admitted its detection tools can only go so far, since the technology powering deepfakes continues to evolve at such a rapid clip.

“We expect that methods for generating synthetic media will continue to grow in sophistication,” Microsoft said at the time.

DARPA

DARPA, the US military’s no-holds-barred research and development division, has had a program investigating ways to detect synthetic media since at least 2019. DARPA’s Semantic Forensics (SemaFor) program is focused on creating “semantic and statistical analysis algorithms” capable of defending against manipulated images to prevent the spread of disinformation. The program is also developing attribution algorithms to determine the origin of a faked image and to suss out whether it was created for benign purposes or as part of a harmful disinformation attack.

OpenAI

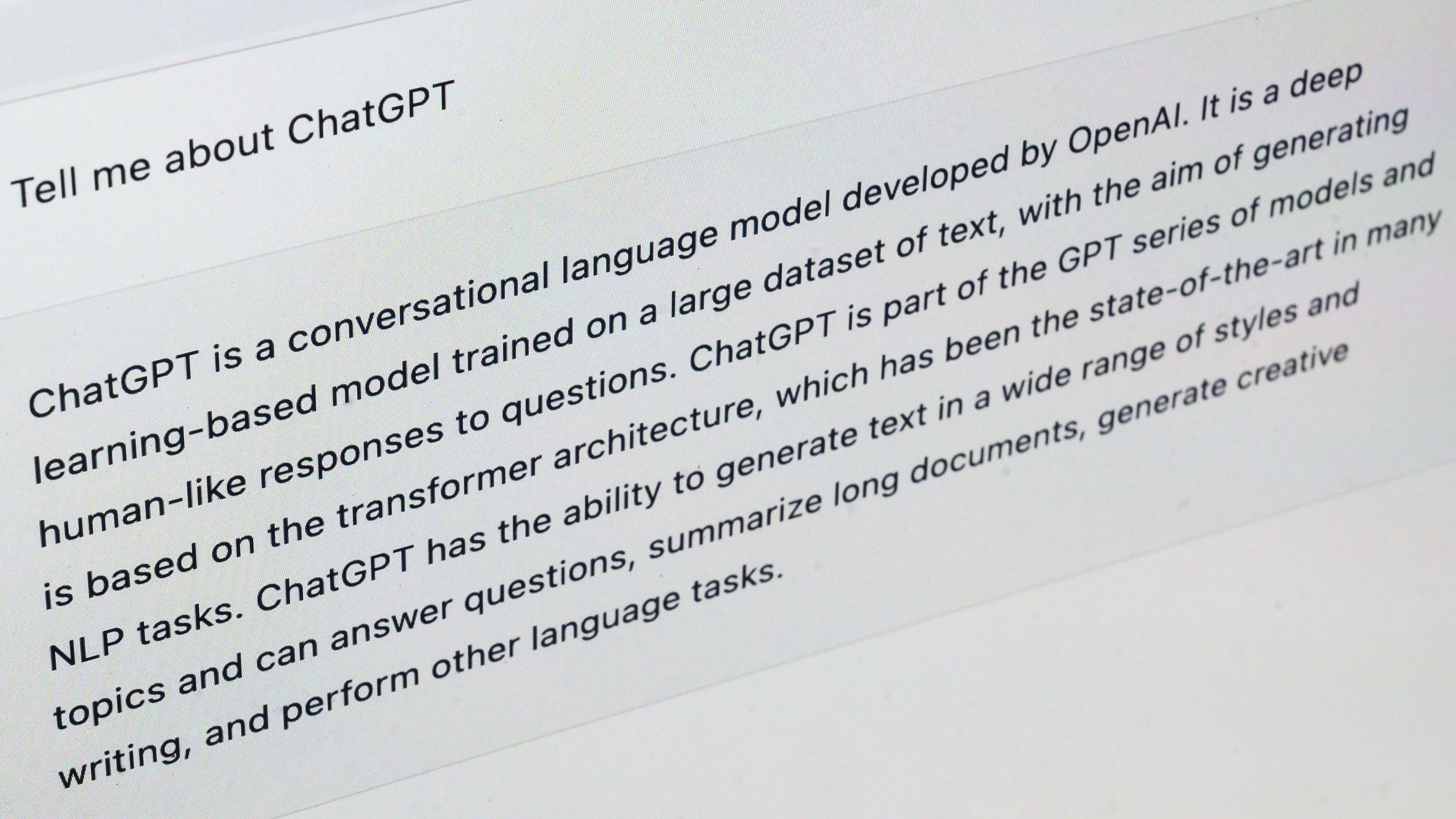

OpenAI has almost single-handedly fast-forwarded mainstream adoption of generative AI with its popular DALL-E image generators and ChatGPT. Now, it’s trying to develop AI classifiers to keep those products from getting out of hand. The classifier, which is limited to text, scans writing and tries to determine whether or not it was written by a human.

So far, it isn’t doing great. In its own evaluation, OpenAI says its classifier correctly identified only 26% of AI-written texts as “likely AI-written.” Worse still, the same system incorrectly labelled 9% of human-written text as AI-written.

“Our classifier is not fully reliable,” OpenAI wrote. “It should not be used as a primary decision-making tool, but instead as a complement to other methods of determining the source of a piece of text.”
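Those two figures interact in a way that’s easy to miss: how trustworthy a “likely AI-written” label is depends on how much of the checked text is actually AI-written. The short Python calculation below plugs in OpenAI’s reported 26% and 9% rates. The 50% and 10% AI-written mixes are hypothetical inputs chosen for illustration, and the calculation assumes the reported rates hold on any batch of documents.

```python
def share_of_flags_correct(ai_share, true_positive_rate=0.26, false_positive_rate=0.09):
    """Fraction of "likely AI-written" labels that land on genuinely AI-written text.

    ai_share: hypothetical fraction of the checked documents that are AI-written.
    Default rates are the figures OpenAI reported for its text classifier.
    """
    flagged_ai = ai_share * true_positive_rate            # AI texts correctly flagged
    flagged_human = (1 - ai_share) * false_positive_rate  # human texts wrongly flagged
    return flagged_ai / (flagged_ai + flagged_human)


for share in (0.5, 0.1):
    print(f"{share:.0%} AI-written -> {share_of_flags_correct(share):.1%} of flags correct")
# 50% AI-written -> 74.3% of flags correct
# 10% AI-written -> 24.3% of flags correct
```

In the second scenario, roughly three out of every four texts the classifier flags would have been written by a human.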

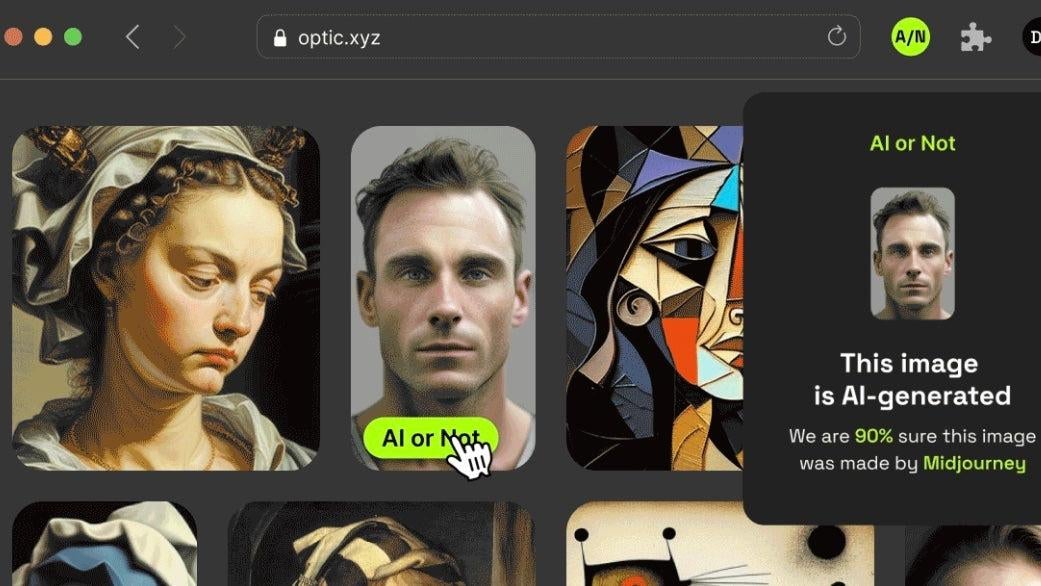

Optic

Deepfake-detecting startup Optic boldly claims it can identify AI-generated images created by Stable Diffusion, Midjourney, DALL-E, and Gan.ai. Users simply drag and drop the image in question into Optic’s website or submit it to a bot on Telegram, and the company spits out a verdict.

Optic presents a percentage showing how confident its system is that the submitted image is AI-generated. It also attempts to determine which particular model was used to create the image. Though Optic claims it correctly identifies AI-generated images 96% of the time, it’s not foolproof. When Gizmodo asked Optic’s model to assess the authenticity of the widely debunked image of a supposed explosion near the Pentagon, Optic determined it was “generated by a human.”

GPTZero

GPTZero is a classification model that claims to quickly detect whether text was generated by OpenAI’s ChatGPT, GPT-3, or GPT-4, or by Google’s Bard. After text is submitted, GPTZero spits out a score giving the probability that the document was AI-generated. GPTZero says its tool can analyse a wide assortment of English prose, though the most apparent use case appears to be educators trying to determine whether students are using AI to breeze their way through essays.

More than 6,000 teachers from Harvard, Yale, and the University of Rhode Island have used the tool, according to The New York Times.

Sentinel

Sentinel works with governments, media, and defence agencies around the world in an effort to create a so-called “trust layer for the internet.” The company reportedly relies on four separate layers of deepfake defence. At the most basic layer, Sentinel scans documents for hashes (basically digital fingerprints) associated with known deepfaked materials. Deeper layers use AI to analyse faces for signs of visual manipulation, and another looks for changes in audio.

“My family used to live under the world’s biggest disinformation apparatus — the Soviet Union,” Sentinel CEO Johannes Tammekänd said. “That’s why we know firsthand the consequences of information warfare and why it is one of the biggest threats to democracies.”
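Sentinel hasn’t said which hash functions it uses, so the Python sketch below only illustrates the general idea behind that most basic layer: fingerprint each file and check the fingerprint against a list of files already confirmed as deepfakes. It assumes SHA-256 fingerprints and an in-memory set; `fingerprint`, `register_known_fake`, and `is_known_fake` are hypothetical names, not Sentinel’s API.

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """SHA-256 digest of a file's raw bytes (assumed fingerprint scheme)."""
    return hashlib.sha256(data).hexdigest()


def register_known_fake(data: bytes, registry: set) -> None:
    """Add a file already confirmed as a deepfake to the hypothetical blocklist."""
    registry.add(fingerprint(data))


def is_known_fake(data: bytes, registry: set) -> bool:
    """True only if these exact bytes were registered before."""
    return fingerprint(data) in registry


known_fakes = set()
fake_image = b"...bytes of a debunked image..."  # placeholder content
register_known_fake(fake_image, known_fakes)
print(is_known_fake(fake_image, known_fakes))            # True
print(is_known_fake(fake_image + b"\x00", known_fakes))  # False: one extra byte changes the hash
```

The second check shows why this can only be a first layer: a cryptographic hash matches byte-identical copies only, so a re-compressed, resized, or cropped copy slips through. Perceptual hashing, which tolerates small changes, and the deeper AI-based layers are the usual answers to that weakness.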

Reality Defender

Founded just two years ago, Reality Defender offers enterprise-level tools that let businesses and other large organisations quickly scan audio, photos, and video for evidence of digital manipulation. It’s unclear exactly which AI models Reality Defender can detect; however, the company claims its system was used by NATO’s Strategic Communications Centre of Excellence to identify deepfakes and other forms of misinformation being amplified by pro-Russian accounts on the social media site VKontakte.

Attestiv

Massachusetts-based Attestiv uses its own AI to “forensically scan” photos, videos, documents, and even telemetry data submitted to the company or captured on its apps, looking for anomalies that could point to digital manipulation. Once a file is scanned, Attestiv offers up an “aggregate tamper score” estimating the likelihood that it was AI-generated or altered. If manipulation is detected, Attestiv stores a digital copy of that image on a blockchain ledger so it can easily spot cases where the manipulated media appears again.
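Attestiv hasn’t published how its ledger is built. As a rough illustration of why a blockchain-style record suits this job, the Python sketch below keeps an append-only log in which every entry includes the hash of the one before it, so quietly editing an old entry breaks the chain. It is a single-machine stand-in rather than a real distributed blockchain, and for brevity it stores a SHA-256 fingerprint of each flagged file instead of a full copy; `TamperLedger` and its methods are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _entry_hash(body: dict) -> str:
    # Hash a canonical JSON encoding so the same fields always give the same digest.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()


@dataclass
class TamperLedger:
    """Append-only, hash-chained log of media flagged as manipulated (illustrative only)."""
    entries: list = field(default_factory=list)

    def record(self, media: bytes, tamper_score: float) -> str:
        prev = self.entries[-1]["entry_hash"] if self.entries else "0" * 64
        body = {
            "media_hash": hashlib.sha256(media).hexdigest(),
            "tamper_score": tamper_score,
            "prev_hash": prev,  # links this entry to the one before it
        }
        entry_hash = _entry_hash(body)
        self.entries.append({**body, "entry_hash": entry_hash})
        return entry_hash

    def seen_before(self, media: bytes) -> bool:
        """Has this exact file been flagged already?"""
        digest = hashlib.sha256(media).hexdigest()
        return any(e["media_hash"] == digest for e in self.entries)

    def verify(self) -> bool:
        """Recompute every link; any edited entry breaks the chain."""
        prev = "0" * 64
        for e in self.entries:
            body = {k: e[k] for k in ("media_hash", "tamper_score", "prev_hash")}
            if e["prev_hash"] != prev or e["entry_hash"] != _entry_hash(body):
                return False
            prev = e["entry_hash"]
        return True


ledger = TamperLedger()
ledger.record(b"...bytes of a manipulated image...", tamper_score=0.93)
print(ledger.seen_before(b"...bytes of a manipulated image..."))  # True
print(ledger.verify())                                            # True
ledger.entries[0]["tamper_score"] = 0.10  # simulate someone rewriting history
print(ledger.verify())                                            # False
```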

Blackbird.AI

Founded in 2014, Blackbird.AI is one of the older companies in the field using AI to catch potential deepfakes and limit their spread online. Unlike newer technology companies focused on scanning documents for minute signs of digital manipulation, Blackbird spends most of its energy on tracking and limiting the spread of images already identified as AI-generated or altered.

Blackbird analyses social media trends in real time and investigates numerous “media risks” to identify and squash disinformation or influence campaigns before they get out of control. Increasingly, those malicious campaigns may rely on AI-generated images to fool audiences into believing a false narrative, such as the fake pictures of Donald Trump’s arrest.

Fictitious.AI

Anyone who’s spent time in a college classroom over the past decade knows plagiarism detectors aren’t anything new. But many of those systems, which simply scan for text already published word-for-word on the internet, were unable to account for ChatGPT and other chatbots, which appear to generate new, “unique” content based on a user’s prompts.

Fictitious.AI is trying to fill that gap. The company, which targets its products specifically at educators, claims its own AI models can detect lines of text generated by models like GPT-4 and ChatGPT. The company provides educators with an interactive console where they can submit a student’s work and view the resulting score. What teachers or professors actually do with that score, however, remains up to them.
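The gap Fictitious.AI is targeting is easy to see with a simplified version of verbatim matching. The Python sketch below scores a submission by the share of its three-word sequences that also appear word-for-word in a known source. The trigram size and the function names are assumptions for illustration; real plagiarism checkers compare against far larger collections of text.

```python
def word_ngrams(text: str, n: int = 3) -> set:
    """All runs of n consecutive lower-cased words in the text."""
    words = text.lower().split()
    return {tuple(words[i:i + n]) for i in range(len(words) - n + 1)}


def verbatim_overlap(submission: str, source: str, n: int = 3) -> float:
    """Share of the submission's word n-grams that appear verbatim in the source."""
    sub = word_ngrams(submission, n)
    if not sub:
        return 0.0  # too short to contain any n-gram
    return len(sub & word_ngrams(source, n)) / len(sub)


source = "the quick brown fox jumps over the lazy dog"
print(verbatim_overlap("the quick brown fox jumps over the lazy dog", source))  # 1.0
print(verbatim_overlap("a fast auburn fox leaps above a sleepy hound", source))  # 0.0
```

Chatbot output tends to look like the second example, new wording rather than copied passages, so a verbatim matcher has little to catch. That is why Fictitious.AI relies on its own AI models instead.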

Sensity AI

Netherlands-based Sensity AI claims it can detect AI-generated images from models like DALL-E, Stable Diffusion, and Midjourney, as well as text generated by OpenAI’s GPT-3. Unlike other companies on this list, Sensity AI also says its model can accurately detect instances of digital face swapping. According to Sensity, face swapping in particular is used by fraudsters trying to impersonate victims and break into accounts protected by face scans or other biometric identifiers.