Apple is embracing AI to bring a new suite of accessibility features to its phones, tablets, and laptops. Though the tech giant has announced accessibility features in May before, including last year’s on-device live captions, this new bevy of features could help those with cognitive, speech, and mobility disabilities. There’s even a new AI detection feature that could make it much easier for those with vision issues to select the right options on a microwave or the right floor in an elevator, as well as an AI that can learn how to mimic your voice.

On Tuesday, Apple announced it planned to add several new features to some of its most common apps on iPhone and iPad. Though the Cupertino company did not provide dates for these upcoming quality-of-life improvements, there are new accessibility options both big and small planned for iPhones, iPads, and Macs.

Front and centre is a new Assistive Access feature that takes some of the iPhone’s most common apps including Photos, Phone, Messages, Camera, Music, and FaceTime, then drastically simplifies the UI.

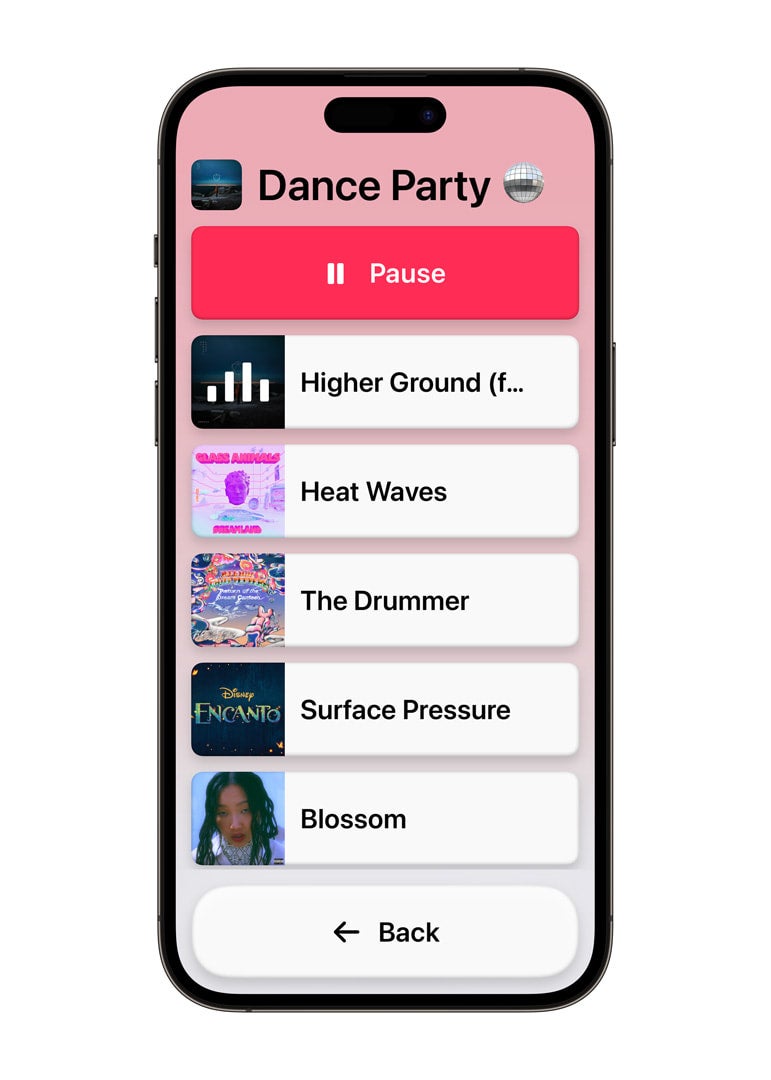

Messages will include a new, larger emoji-only keyboard and a new option to record video messages. The home screen and apps also feature new, optional grid-based layouts. For instance, Camera with Assistive Access enabled will showcase the view through the lens with a large “Take Photo” button highlighted in yellow at the bottom of the screen. Apple’s Photos app will display two rows of photo tiles, while Calls will highlight recent conversations with large names and profile images displayed directly on the screen. Apple Music is also getting a facelift under this new setting, highlighting songs and their album covers in an easy-to-read, tiled format.

In addition, the company is introducing several smaller changes, such as more adjustable text size in apps like Finder, Mail, Messages, Calendar, and Notes. Users can now more easily pause moving images such as GIFs in Messages and Safari, and there will soon be more phonetic suggestions for users trying to emphasise the right command (it’s “do,” not “dew”) when using Voice Control. Apple will also now allow users to pair hearing devices compatible with iPhones to Macs.

A new way for people with vision disabilities to press the right buttons

Apple is utilising modern machine learning-based AI with a new Point and Speak feature in the Magnifier app. Essentially, the feature tracks users’ finger movements in front of the camera lens, then reads off the text the user is pointing to. As you can see in the GIF at the top of the page, Apple used the example of a microwave, where the app read off each button every time a finger hovered in front of it.

Apple said this new feature uses the phone’s camera as well as LiDAR and AI to read and then pronounce the text. The new feature should also work with current Magnifier features that describe images and detect people and doors.

iPhone, iPad, and Mac will be able to sound like you

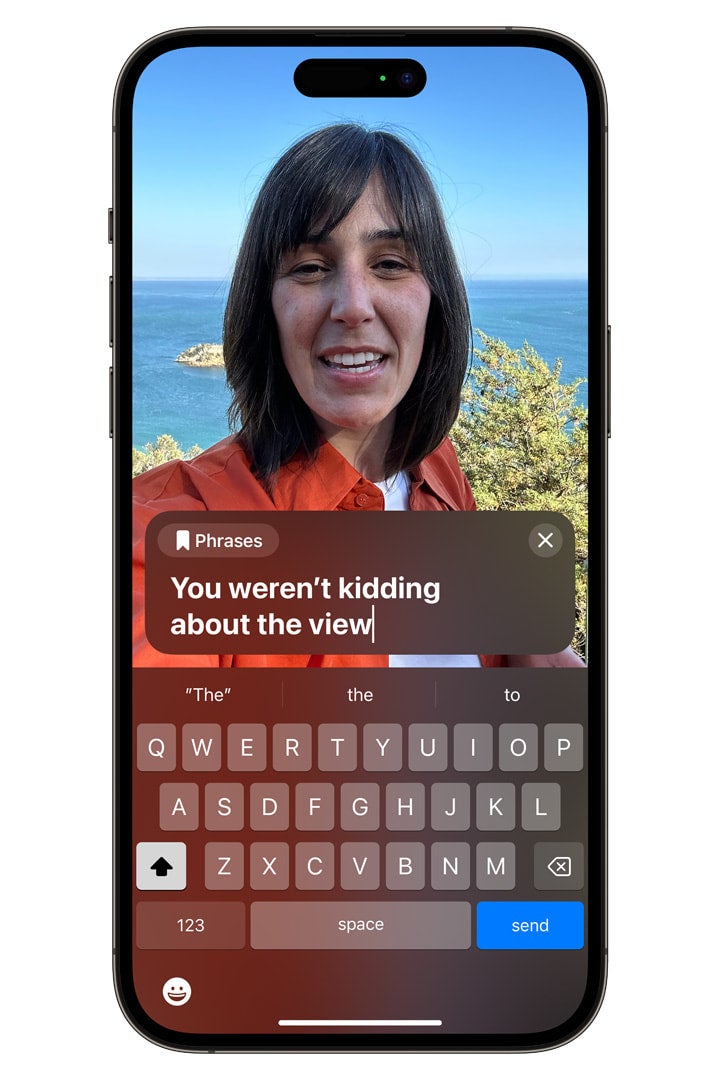

Apple said it’s drastically improving its text-to-speech capabilities across its user-end platforms for users with speech impairments. This upcoming Live Speech feature will work during phone and FaceTime calls, as well as during in-person conversations.

Users should also be able to record some of their more commonly used expressions for quick access. The Cupertino company is also adding the ability for users to record their speech patterns, a way to, in the company’s words, “create a voice that sounds like them” for when they are at risk of eventually losing their speech. The Personal Voice feature asks users to read a random assortment of text prompts equaling about 15 minutes of audio. The system then uses AI to generate speech that matches your personal style of speaking.

The system could be similar to existing platforms like ElevenLabs. While those AI systems have been awash in allegations of people stealing and replicating other people’s voices, this new feature is tied to the user’s iPhone, and it should also work with Live Speech once it’s eventually released.

Apple’s forays into AI-powered accessibility

As we mentioned before, Apple has made multiple accessibility announcements in the past in time for Global Accessibility Awareness Day. Past efforts have included gesture controls and ASL support and even a few so-called “accessibility emojis.”

What’s interesting about this year is how many of the new features are centered around machine learning, or, more succinctly, modern artificial intelligence systems. The company has been pretty mum on any of its AI initiatives, especially on whether it is working on any first-party generative AI systems. These new features could point to how Apple is trying a different tack than the likes of Google and Microsoft, which are shoving language models and text-to-image generators into practically every user-end product.

These features could become just a few AI highlights at Apple’s upcoming WWDC in June.