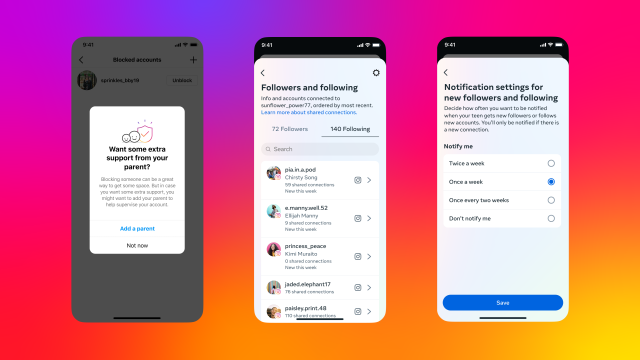

Meta has unveiled a suite of new safety tools intended to give parents more oversight of their teens’ activity on Messenger and Instagram DMs. Starting today, parents and guardians can view how much time their teen spends on Messenger, receive updates on changes to their teen’s contact list and privacy settings, and receive a notification if their teen decides to block another user. Parents can also now review which types of users (friends, friends of friends, or no one) can message their teens, though they can’t change that setting. Meta told Gizmodo that, notably, these features don’t let parents read the contents of teens’ messages on either Messenger or Instagram DMs.

The new safety tools arrive just weeks after the publication of a damning Wall Street Journal investigation exposing the ways pedophiles allegedly manipulate Instagram to purchase and sell underage sex content. State bills attempting to force social media companies to obtain parental consent before offering services to minors are also cropping up across the country. The changes mark Meta’s latest attempt to recover from a long history of reports and leaked documents allegedly linking its products to teen harm.

“Today’s updates were designed to help teens feel in control of their online experiences and help parents feel equipped to support their teens,” Meta said. “We’ll continue to collaborate with parents and experts to develop additional features that support teens and their families.”

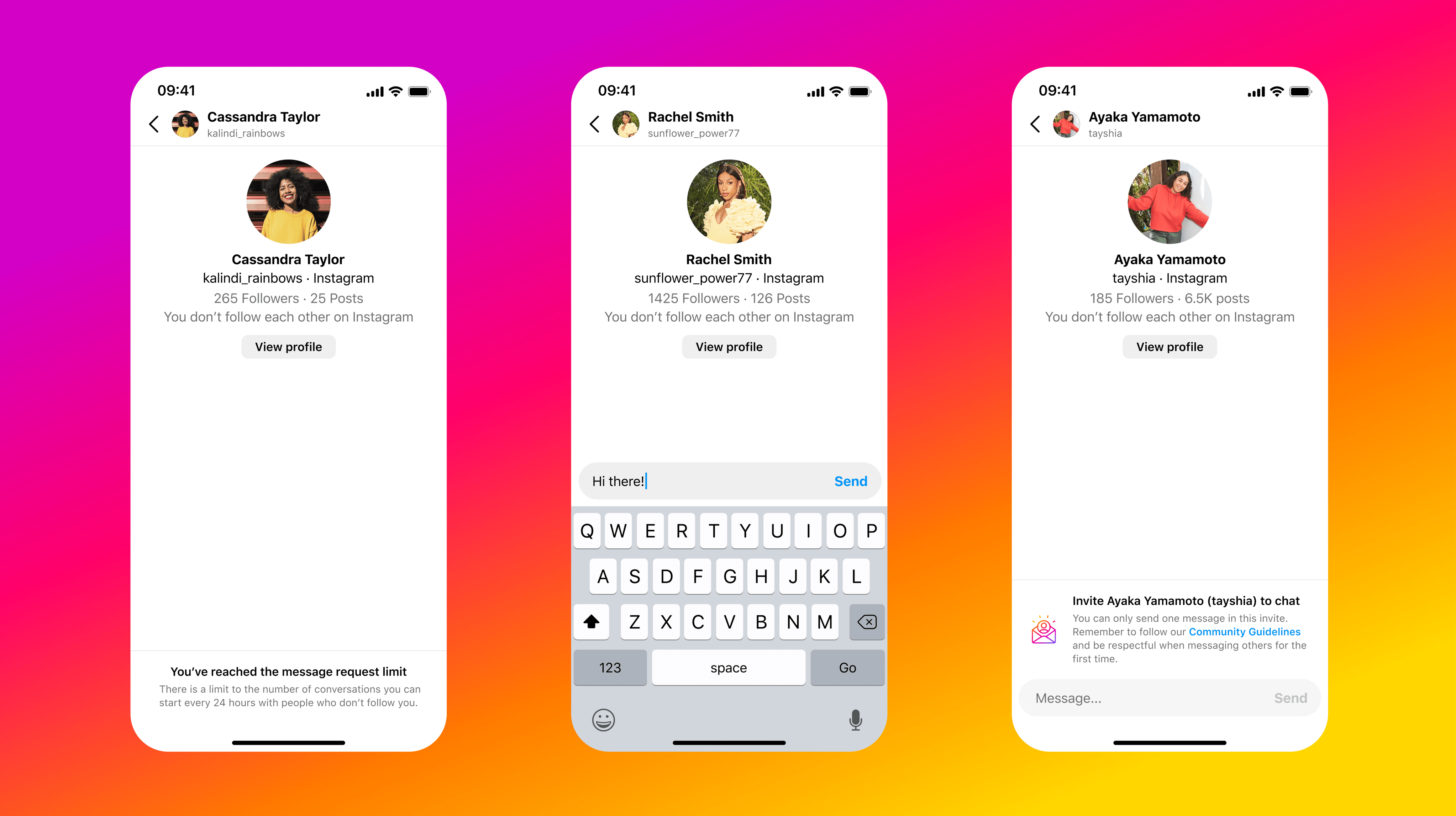

On Instagram DMs, Meta is testing a feature that forces users to send an invite and receive permission to connect before they can message a user who doesn’t follow them. The new feature isn’t specific to teens and could be implemented across all of Instagram. In practice, it sounds similar to the invite tool currently used by LinkedIn. Meta will limit these invites to text in an effort to prevent users from receiving unwanted images or videos.

These new Instagram guardrails come around six months after Meta launched “Quiet Mode,” which temporarily pauses notifications and sends an auto-reply to other users letting them know a user is unavailable. Meta says Quiet Mode will become available to Instagram users globally in the coming weeks.

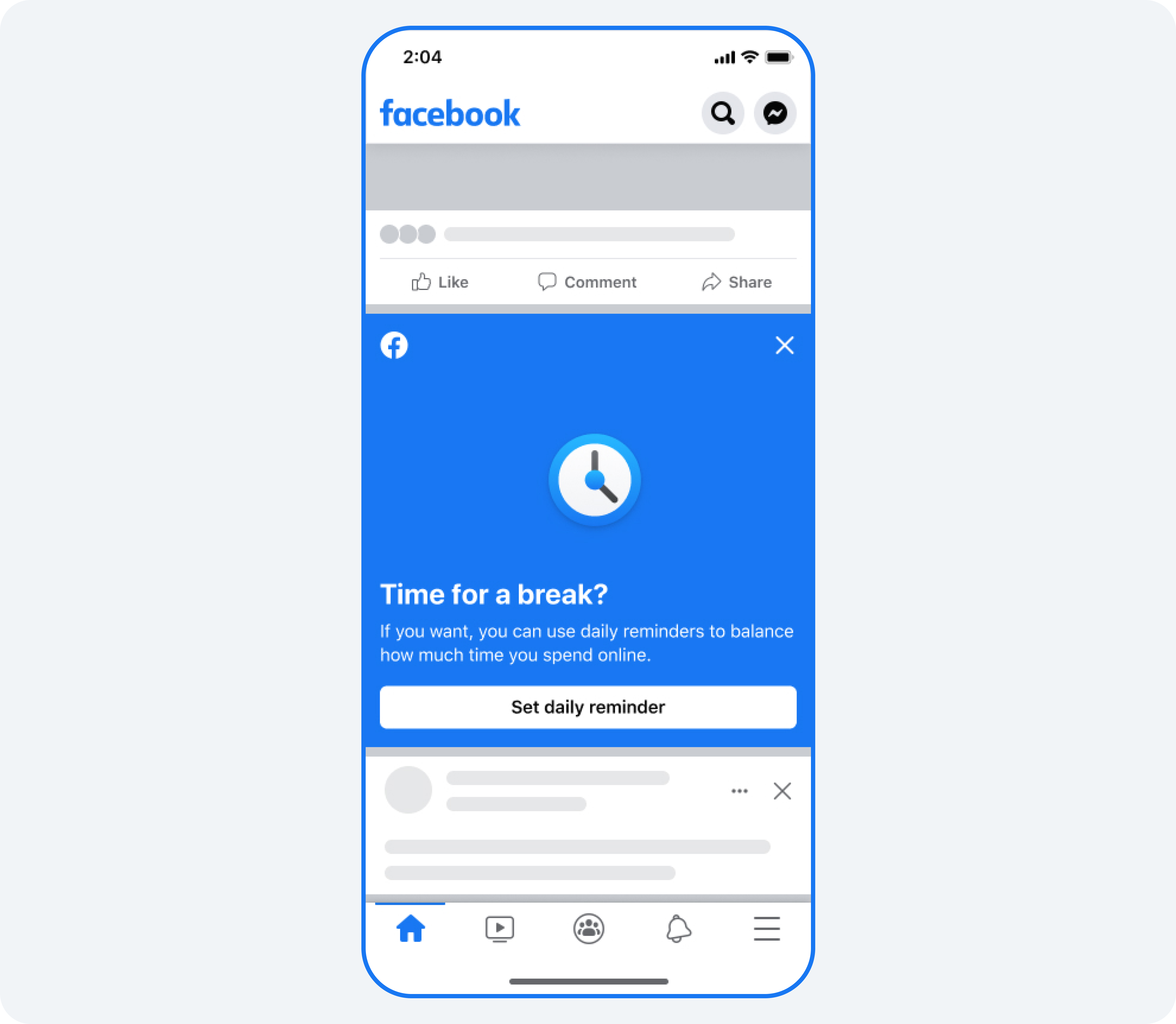

Meta also introduced several new “nudges” meant to alert young users who may be getting sucked into a doom-scrolling session. A new notification on Facebook will tell young users to consider taking a break after using the app for more than 20 minutes. The company says it’s also testing a feature that will nudge teens to close the Instagram app if they are scrolling Reels late at night. Both of those features come in the wake of a growing body of scholarly research that shows a strong correlation between prolonged screen time and signs of increased anxiety and depression in certain young users. US Surgeon General Vivek Murthy recently pointed to prolonged screen time as one of several “ample indicators” that social media poses a “profound risk of harm” to children’s mental health and development.

Parents will also have new, broader visibility into their teen’s use of Instagram. Now, when a teen user blocks someone, the teen will receive a notification encouraging them to add their parents as supervisors on their account. Parents will also have the ability to see how many friends their teen has in common with an account. Meta hopes these tools and insights could prompt teens to start more conversations with their parents about their social media usage.

Meta tries to jump out ahead of new child safety legislation

The new safety tools come just as lawmakers around the country consider new legislation forcing social media companies and other tech firms to implement stronger privacy protections for young users. Just about everyone agrees more can be done to prevent harm to young users, but states are widely divergent on how to actually implement those changes. Some states like California and Minnesota are pursuing legislation that would place strict limits on the types of data companies can collect on minors. Others like Texas and Utah are saying to hell with it and banning tech companies from opening accounts for teens altogether unless the young users first receive parental consent.

The more extreme state privacy laws don’t just stop at getting a parent’s OK, though. In Texas, a recently passed law called Securing Children Online Through Parental Empowerment grants parents or guardians vast control over kids’ accounts by letting them review and download personal identifying information associated with the minor and request the deletion of any of it. In Utah, one of the state’s recently passed laws would ban anyone under the age of 18 from using social media apps between 10:30 PM and 6:30 AM.

Tech companies, including Meta, have vigorously opposed this wave of legislation, arguing the bills are overly broad and could counterintuitively force them to collect more sensitive data on young users in order to comply with various age verification requirements.

“There’s a very real and complex challenge because inevitably people move toward asking for IDs which creates all kinds of access to information that you might not really want us to have,” Meta’s vice president of safety Antigone Davis said during a committee hearing earlier this year, per Axios. Meta did not comment when asked about the rise in legislation targeting young users on social media.