When protests in Pakistan earlier this year escalated into clashes between pro-government forces and supporters of former Prime Minister Imran Khan, the now-imprisoned leader turned to social media to bolster his message. Khan shared a brief video clip on Twitter showing his supporters holding up signs bearing his face and chanting his name. The clip ends with a still shot of a woman in an orange dress boldly standing face to face with a line of heavily armoured riot police.

“What will never be forgotten is the brutality of our security forces and the shameless way they went out of their way to abuse, hurt, and humiliate our women,” Khan tweeted. The only problem: The image showing the woman bravely standing before the police wasn’t real. It was created using one of many new AI image generators.

Khan isn’t the only political leader turning to AI deepfakes for political gain. A new report from Freedom House, shared with Gizmodo, found that political leaders in at least 16 countries deployed deepfakes over the past year to “sow doubt, smear opponents, or influence public debate.” Though a handful of those examples occurred in less developed countries in Sub-Saharan Africa and Southwest Asia, at least two originated in the United States.

Both former President Donald Trump and Florida Governor Ron DeSantis have used deepfaked video and audio to try to smear each other ahead of the upcoming Republican presidential primary. In Trump’s case, he used deepfaked audio mimicking George Soros, Adolf Hitler, and the Devil himself to mock DeSantis’ shaky campaign announcement on Twitter Spaces. DeSantis shot back with deepfake images purporting to show Trump embracing former National Institute of Allergy and Infectious Diseases Director Anthony Fauci. Showing kindness to Fauci in today’s GOP is tantamount to political suicide.

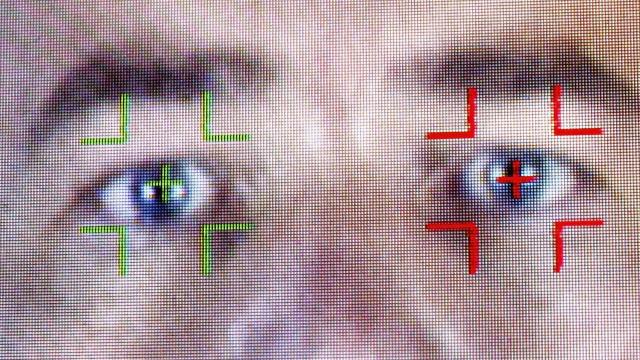

“AI can serve as an amplifier of digital repression, making censorship, surveillance, and the creation and spread of disinformation easier, faster, cheaper, and more effective,” Freedom House noted in its “Freedom on the Net” report.

The report details numerous troubling ways advancing AI tools are being used to amplify political repression around the globe. Governments in at least 22 of the 70 countries analyzed in the report had legal frameworks requiring social media companies to deploy AI to hunt down and remove disfavored political, social, and religious speech. Those frameworks go beyond the standard content moderation policies at major tech platforms. In these countries, Freedom House argues, the laws compel companies to remove political, social, or religious content that “should be protected under free expression standards within international human rights laws.” Beyond making censorship more efficient, using AI to remove political content also helps the state conceal its own role.

“This use of AI also masks the role of the state in censorship and may ease the so-called digital dictator’s dilemma, in which undemocratic leaders must weigh the benefits of imposing online controls against the costs of public anger at such restrictions,” the report adds.

In other cases, state actors are reportedly turning to private “AI for hire” companies that specialize in AI-generated propaganda designed to mimic real newscasters. State-backed news outlets in Venezuela, for example, began sharing strange videos of mostly white, English-speaking news anchors countering Western criticisms of the country. Those anchors were actually AI-generated avatars created by a company called Synthesia. Pro-China bot accounts shared similar clips of AI-generated newscasters on social media, this time appearing to rebuff critics. The pro-China avatars were presented as anchors for an entirely fabricated outlet called “Wolf News.”

The Freedom House researchers see these novel deepfake newscasters as a technical and tactical evolution of the long-standing practice of governments forcing or paying news stations to push propaganda.

“These uses of deepfakes are consistent with the ways in which unscrupulous political actors have long employed manipulated news content and social media bots to spread false or misleading information,” the report notes.

Maybe most troubling of all, the Freedom House report shows a rise in political actors dismissing genuine video and audio as deepfakes. In one example, Palanivel Thiagarajan, a prominent state official in India, reportedly tried to brush off leaked audio of himself disparaging his colleagues by claiming it was AI-generated. It was real. Researchers also believe the mistaken assumption that a video of former Gabonese President Ali Bongo was faked may have helped spark a political uprising.

Though most of the political manipulation and disinformation efforts Freedom House identified in the past year still rely on lower-tech tactics such as bots and paid trolls, that equation could flip as generative AI tools become more convincing and cheaper. Even somewhat unconvincing or easily refuted AI manipulation, Freedom House argues, still “undermines public trust in the democratic process.”

“This is a critical issue for our time, as human rights online are a key target of today’s autocrats,” Freedom House President Michael J. Abramowitz said. “Democratic states should bolster their regulation of AI to deliver more transparency, provide effective oversight mechanisms, and prioritize the protection of human rights.”