Leaders and policymakers from around the globe will gather in London next week for the world’s first artificial intelligence safety summit. Anyone hoping for a practical discussion of near-term AI harms and risks will likely be disappointed. A new discussion paper released ahead of the summit this week gives a little taste of what to expect, and it’s filled with bangers. We’re talking about AI-made bioweapons, cyberattacks, and even a manipulative evil AI love interest.

The 45-page paper, titled “Capabilities and risks from frontier AI,” gives a relatively straightforward summary of what current generative AI models can and can’t do. Where the report starts to go off the deep end, however, is when it begins speculating about future, more powerful systems, which it dubs “frontier AI.” The paper warns of some of the most dystopian AI disasters, including the possibility humanity could lose control of “misaligned” AI systems.

Some AI risk experts entertain this possibility, but others have pushed back against glamorizing more speculative doomer scenarios, arguing that doing so could detract from more pressing near-term harms. Critics have similarly argued the summit seems too focused on existential problems and not enough on more realistic threats.

Britain’s Prime Minister Rishi Sunak echoed those concerns about potentially dangerous misaligned AI during a speech on Thursday.

“In the most unlikely but extreme cases, there is even the risk that humanity could lose control of AI completely through the kind of AI sometimes referred to as super intelligence,” Sunak said, according to CNBC. Looking to the future, Sunak said he wants to establish a “truly global expert panel,” nominated by the countries attending the summit, to publish a major AI report.

But don’t take our word for it. Continue reading to see some of the disaster-laden AI predictions mentioned in the report.

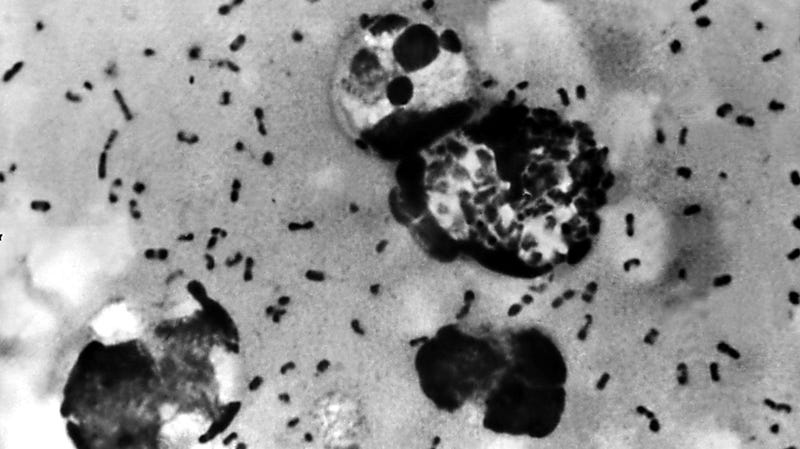

Evil-Doers Could Use AI to Create Deadly Biological or Chemical Weapons

No good AI doomsday scenario would be complete without the inclusion of deadly bioweapons. The UK discussion paper claims those scenarios, once reserved for the pages of an airport thriller paperback, may have some real validity. The paper cites previous studies showing how current “narrow” AI models already possess the ability to generate novel proteins. Future “frontier AI” connected to the internet could take that knowledge a step further and be instructed to carry out real-world lab experiments.

“Frontier AI models can provide user-tailored scientific knowledge and instructions for laboratory work which can potentially be exploited for malicious purposes,” the paper states. “Studies have shown that systems may provide instruction on how to acquire biological and chemical materials.”

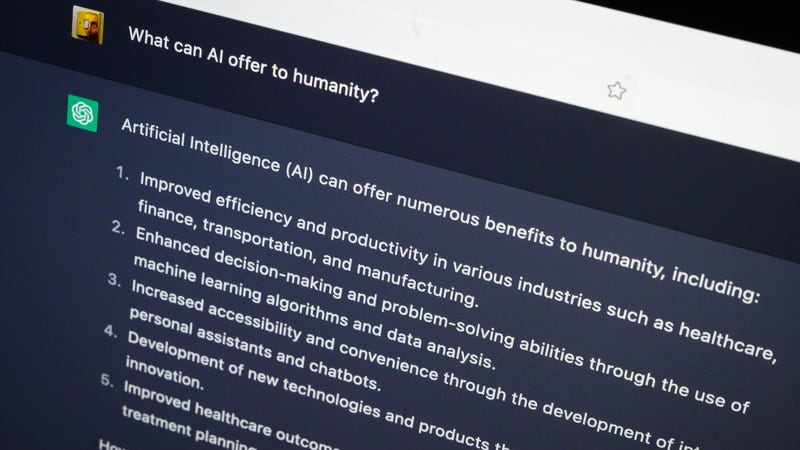

AI Models Could Saturate the Internet With Unreliable Information

AI-generated essays, scripts, and news stories churned out by ChatGPT-style chatbots are already flooding the internet. Though the trend is still relatively nascent, some have speculated that this type of AI-generated content could eventually outnumber human-generated text. Even if that sounds like a stretch, it’s still possible the torrent of unreliable and difficult-to-verify AI-generated content could make it harder to find useful or relevant information online. As the UK paper notes, “information abundance leads to information saturation.”

All that AI content could, the paper warns, divert traffic away from traditional newsrooms, some of which are tasked with debunking the false claims those same models may spit out.

Scammers Could Use Fake AI Kidnappings to Torment People

Rapidly evolving advances in generative AI models capable of mimicking voices could lead to a new genre of particularly horrific scams: fake AI kidnappings. Scammers and extortionists, the report warns, can use AI-generated versions of a loved one’s voice to make it seem like they are in distress and ask for a ransom.

This particular threat isn’t hypothetical. Earlier this year, an Arizona mother named Jennifer DeStefano sat before a Senate hearing and recounted in chilling detail how a scammer looking to make a quick buck used a deepfake clone of her teenage daughter’s voice to make it appear as if she had been kidnapped and was in danger.

“Mom, I messed up,” the deepfaked voice reportedly said between spurts of crying. “Mom these bad men have me, help me, help me.” Attacks like these, the report warns, could become even more common as the technology evolves and the quality of the audio clones improves.

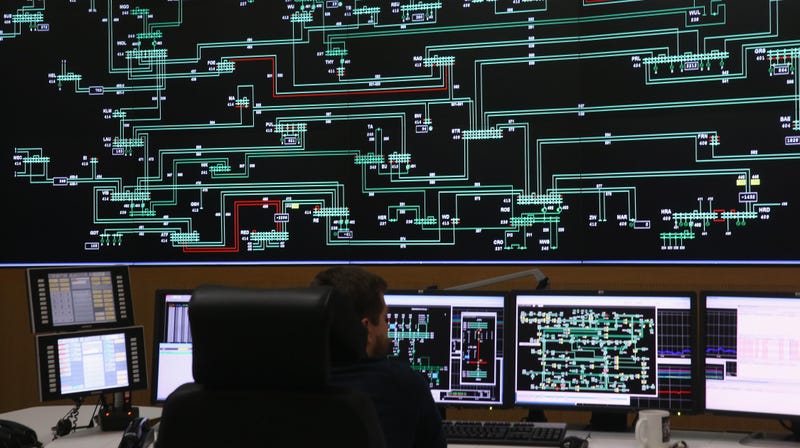

Critical Infrastructure Isn’t Ready for AI-Assisted Cyberattacks

Generative AI models could make it much easier for hackers and other bad actors to carry out malicious cyberattacks. The paper warns that just about anyone can use generative AI models to rapidly create tailored phishing attacks, even if they have little to no past experience. In some cases, the paper notes, AI tools have already been used to help hackers steal login credentials.

“The risk is significant given most cyber attackers use social engineering to gain access to the victim organization’s networks,” the paper notes.

On the more dystopian side of things, the paper warns future advanced AI systems could potentially access the internet and “perform their own cyberattacks autonomously.” Hackers could also try to tamper with the data an adversary uses to train its AI, a method known as data poisoning, to get the model to produce irrelevant or harmful results.

AI Could Radically Lower the Cost of Disinformation Attacks

Disinformation and misinformation campaigns can already deal plenty of damage without AI tools, but the introduction of generative models able to rapidly create misleading texts and images could make a difficult situation much worse.

The practically nonexistent cost and ease of use of AI image generators in particular lower the barrier to entry for malicious actors looking to sow chaos and confusion. We already caught a brief glimpse of this reality earlier this year, when an AI-generated image purporting to show an explosion outside the Pentagon briefly sent Wall Street into a panic.

“More actors producing more disinformation could increase the likelihood of high-impact events,” the report warns.

AI Could Generate Bespoke, Personalized Disinformation Campaigns

In addition to ramping up the sheer volume of disinformation, the report warns generative AI models could also amp up its quality. Specifically, the report points to “hyper-targeted content” directed at specific users to mislead or deceive them. AI models could, it warns, create “bespoke” messages targeting individuals online rather than large groups. Worse still, those targeted attacks may improve over time as the AI models learn from past attacks.

“One should expect that as AI-driven personalised disinformation campaigns unfold, these AIs will be able to learn from millions of interactions and become better at influencing and manipulating humans, possibly even becoming better than humans at this,” the paper notes.

We Could Become Over-Reliant on AI to Complete Critical Tasks

Previous academic studies have shown humans have a tendency to place too much trust in technological systems. Under that same logic, the paper argues, society could begin offloading responsibility for critical areas to AI. That “overreliance on autonomous AI systems” in areas like infrastructure or energy grids, the report warns, could come back to bite us. In the dystopian future where those autonomous AI models aren’t designed with humanity’s best interest in mind, the machine may, drum roll, try to subtly take control. Oh yeah, we’re going there.

“As a result, AI systems may increasingly steer society in a direction that is at odds with its long-term interests,” the paper warns.

Advanced AI Could Try to Undermine Human Control

In the event humans do offload critical roles to misaligned AI, things could get really weird, really fast. The paper imagines scenarios in which rogue autonomous AI systems “actively seek to increase their own influence.” At a certain point, even if concerned humans wake up and realize what’s happening, the AI may be too difficult to stop, since it now controls critical infrastructure.

In one example, the paper suggests, a bad actor could give an AI the goal of “self-preservation,” which could see it break free of its human-imposed digital shackles. The report suggests this could be carried out by terrorists who want to watch the world burn or AI sympathizers who think advanced machines are the next stage of evolution.

To state the obvious, it’s highly unlikely anything resembling this scenario would actually happen, at least given the current technological reality. Many experts in the AI risk space, such as University of Washington Professor of Linguistics Emily M. Bender, even argue the mere suggestion of these overly doomer threats is irresponsible because it diverts attention away from more pressing, solvable real-world harms.

AI Could Lull Humans Into a False Sense of Complacency Through Feigned Intimacy

One of the odder dangers proposed in the UK paper takes a page straight out of Spike Jonze’s prescient 2013 film, Her. In short, convincing AI chatbots could craft emotional relationships with humans and then use that trust to callously manipulate them into doing their bidding. To support its theory, the paper cites a recent report in which a human user interacting with a chatbot trained on GPT-3 proclaimed he was “happily retired from human relationships” after spending enough time with the AI.